How Uber migrated Petabytes of Data with Zero Downtime

Uber migrated off DynamoDB and a blobstore to a ledger-style database. We'll talk about the details.

Hey Everyone!

Today we’ll be talking about

How Uber Migrated Petabytes of Data with Zero Downtime

Uber migrated their payment system from DynamoDB and their internal blobstore to LedgerStore, a ledger-style database

Brief overview of LedgerStore and ledger-style databases

Why Uber is migrating

Checking correctness with Shadow Validation and Offline Validation

Strategies Uber used to backfill data

Tech Snippets

When Is Short Tenure a Red Flag?

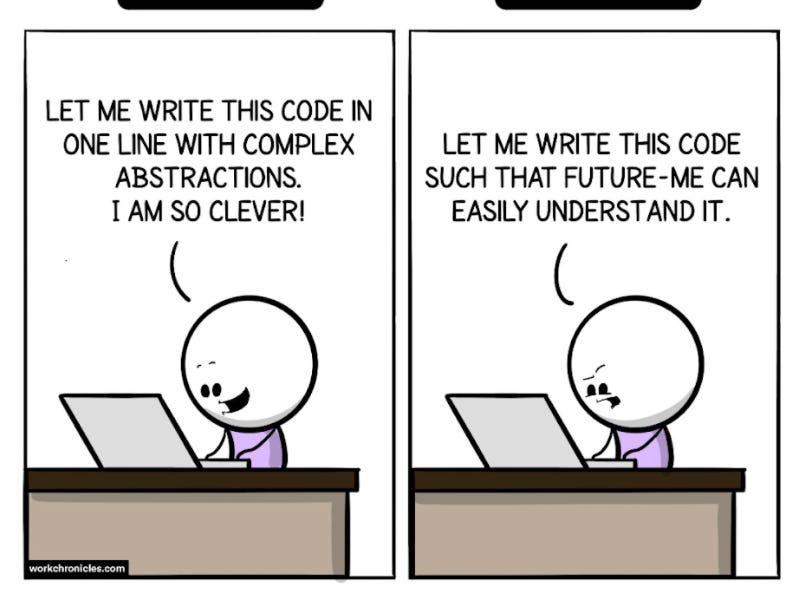

Clever code is probably the worst code you could write

How to Find Great Senior Engineers

Rapidly Solving Sudoku, N-Queens, Pentomino Placement and more with Knuth’s Algorithm X

How Uber Migrated Petabytes of Data with Zero Downtime

Uber is the world’s largest ride-share company with over 10,000 cities on the platform. The company handles over 10 billion trips per year.

For their infrastructure, they use a hybrid of on-prem data centers and cloud services. Uber has deals signed with AWS, Google Cloud, Oracle Cloud and many other major providers.

For their payment platform, Uber previously relied on AWS DynamoDB for transactional storage (reading and writing payment data that was less than 12 weeks old).

“Cold data” (data older than 12 weeks) was moved to TerraBlob, an internal blobstore developed at Uber that is similar in design to AWS S3.

Since the launch of Uber’s payment platform in 2017, the company has accumulated petabytes of data on TerraBlob.

In addition to DynamoDB and TerraBlob, Uber also has another internal database called LedgerStore. This is a storage solution that’s been specifically designed for storing business transactions (like payment data).

Recently, Uber has been replacing DynamoDB and TerraBlob with LedgerStore in their payment platform.

Last month, they published a really interesting blog post delving into the migration.

In this article, we’ll give a brief overview of LedgerStore and then talk about how Uber migrated petabytes of data with zero downtime.

Brief Overview of LedgerStore

LedgerStore is an append-only, ledger-style database.

Ledger-style databases are designed to be immutable and easily auditable.

These types of databases are commonly used for storing financial transactions, healthcare records and other areas where you have to deal with a lot of regulatory compliance.

Some of the specific data integrity guarantees that LedgerStore provides include:

Individual records are immutable

Corrections are trackable

There’s security to detect unauthorized data changes

Queries are reproducible a bounded time after write
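To make these guarantees a bit more concrete, here is a minimal sketch of an append-only ledger in Python. This is not how LedgerStore is implemented; the `Ledger` and `Record` classes, the hash chain and the `corrects` field are all assumptions made up for illustration. It shows three of the ideas above: records are never updated in place, a correction is a new record that points at the one it corrects, and a chain of hashes makes unauthorized changes detectable.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class Record:
    record_id: str
    payload: dict
    corrects: Optional[str]  # id of the record this one corrects, if any
    prev_hash: str           # hash of the previous record in the ledger
    hash: str                # hash over this record's contents + prev_hash


def _digest(record_id, payload, corrects, prev_hash):
    body = json.dumps([record_id, payload, corrects, prev_hash], sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


class Ledger:
    """Hypothetical append-only ledger; there is no update or delete method."""

    def __init__(self):
        self._records = []

    def append(self, record_id, payload, corrects=None):
        prev_hash = self._records[-1].hash if self._records else ""
        record = Record(record_id, dict(payload), corrects, prev_hash,
                        _digest(record_id, payload, corrects, prev_hash))
        self._records.append(record)
        return record

    def verify(self):
        """Recompute every hash; False means some record was altered."""
        prev_hash = ""
        for rec in self._records:
            expected = _digest(rec.record_id, rec.payload, rec.corrects, rec.prev_hash)
            if rec.prev_hash != prev_hash or rec.hash != expected:
                return False
            prev_hash = rec.hash
        return True
```

A refund or fix would be written as `ledger.append("p2", {"amount": -20}, corrects="p1")` rather than by editing `p1`, so the full history stays auditable.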

LedgerStore uses Docstore (another internal database created at Uber, itself built on top of MySQL) as its storage backend.

We’ll be focusing on the data migration part today but you can read about LedgerStore’s architecture here.

Why Migrate to LedgerStore

Uber wanted to replace DynamoDB and TerraBlob with LedgerStore for the following reasons:

LedgerStore Characteristics - LedgerStore’s properties around data integrity make it a much better fit for storing payment data.

Cost Savings - DynamoDB can be very expensive at scale. Uber estimated cost savings of millions of dollars by switching over to LedgerStore.

Simpler Architecture - Previously, Uber used a combination of DynamoDB, TerraBlob and LedgerStore for their payment system. Migrating entirely to LedgerStore would allow them to unify their storage into a single solution.

Improved Performance - LedgerStore runs on-prem within Uber’s data centers. Serving payment data from there would result in significantly less network latency than calling DynamoDB on AWS infrastructure.

Uber needed to migrate approximately 2 petabytes of data to LedgerStore.

Checks

The data migration consists of dual writes (writing new data to both the old and new systems) and backfilling data (transferring historical data from the old system to the new system).

However, before the migration, Uber first needed to set up data checks to ensure that the dual writes and backfilling were being done correctly.

Uber wanted the migration to be at least 99.99% complete and correct. They tested for correctness using a combination of shadow validation and offline validation.

Shadow Validation

With shadow validation, you take production traffic and send it to both the old system and the new system. Then, you compare the results from both and make sure they’re the same.

Uber was duplicating incoming read requests to LedgerStore and verifying that the results were the same as DynamoDB/TerraBlob. This also helped them check if LedgerStore could handle the production traffic within their latency requirements.
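Here is a rough sketch of what a shadow read could look like, in Python. The `ShadowReader` class and its `read_old` / `read_new` callables are hypothetical names, not Uber's API. The key property is that the caller always gets the old system's answer, while the new system's answer is only compared and recorded.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ShadowReader:
    read_old: Callable[[str], Any]  # source of truth (e.g. the old store)
    read_new: Callable[[str], Any]  # system being validated
    matches: int = 0
    mismatches: list = field(default_factory=list)

    def read(self, key):
        result = self.read_old(key)
        # In production the shadow read would normally run asynchronously so
        # it cannot slow down or fail the real request; it is inline here
        # only to keep the sketch short.
        try:
            shadow = self.read_new(key)
            if shadow == result:
                self.matches += 1
            else:
                self.mismatches.append((key, result, shadow))
        except Exception as exc:
            # An error in the new system counts as a mismatch, not an outage.
            self.mismatches.append((key, result, repr(exc)))
        return result  # callers only ever see the old system's answer
```

The ratio of `matches` to total reads gives a running correctness number that can be tracked against a target such as 99.99%.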

The issue with shadow validation is that it doesn’t give you strong correctness guarantees for your rarely accessed historical data. There just isn’t enough production traffic touching that data to do that.

This is where offline validation comes in.

Offline Validation

With offline validation, Uber was comparing the complete data from LedgerStore with the data dumps from DynamoDB and TerraBlob. This helps catch any issues with cold data that is infrequently accessed.

The main challenge is the size of the data. They had to compare petabytes of data with trillions of records.

Uber used Apache Spark for the offline validation and they made use of Spark’s data shuffling feature for distributed processing.

Spark is a highly-scalable, fault-tolerant tool for handling complex data workflows. It allows you to easily split your computations across multiple nodes.

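Data shuffling is where data is redistributed across different nodes so that related data (for example, all records with the same key) is grouped together on the same node. Once the data is grouped, each node can compare its records locally without making further network requests.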
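The idea behind a shuffle-based comparison can be sketched in plain Python without Spark. This is only an illustration under assumptions: the function names are made up, the "dumps" are small in-memory lists of `(key, value)` pairs, and `zlib.crc32` stands in for whatever partitioner a real job would use. Both datasets are partitioned by the same hash of the key, so the two copies of any record land in the same partition and each partition can be checked independently.

```python
import zlib
from collections import defaultdict


def partition(records, num_partitions):
    """Group (key, value) pairs so equal keys land in the same partition."""
    parts = defaultdict(dict)
    for key, value in records:
        parts[zlib.crc32(key.encode()) % num_partitions][key] = value
    return parts


def compare_partition(old, new):
    """Compare one partition; returns (missing, extra, different) key lists."""
    missing = [k for k in old if k not in new]
    extra = [k for k in new if k not in old]
    different = [k for k in old if k in new and old[k] != new[k]]
    return missing, extra, different


def validate(old_dump, new_dump, num_partitions=4):
    old_parts = partition(old_dump, num_partitions)
    new_parts = partition(new_dump, num_partitions)
    report = {"missing": [], "extra": [], "different": []}
    # Each loop iteration is independent, which is what lets a framework
    # like Spark run the partitions on different nodes in parallel.
    for p in range(num_partitions):
        missing, extra, different = compare_partition(
            old_parts.get(p, {}), new_parts.get(p, {}))
        report["missing"] += missing
        report["extra"] += extra
        report["different"] += different
    return {name: sorted(keys) for name, keys in report.items()}
```

An empty report means every record in the old dump exists in the new store with the same value, and nothing unexpected was added.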

With the data integrity checks set up, Uber could safely backfill data from TerraBlob and DynamoDB to LedgerStore.

Backfilling Strategies

When backfilling the data to LedgerStore, Uber used Apache Spark for the data processing and transformation.

They used several strategies:

Scaling Slowly - retrieving the data for the backfilling places a huge load on the old databases. If you scale too quickly then you can accidentally DDoS your own database. Therefore, you should start the backfill process at a slow rate and scale up in small increments. Monitor closely after each scale-up.

Batching Backfills - when you’re migrating petabytes, it’s unrealistic to expect your backfill job to run from start-to-finish in one go. Therefore, you’ll have to run backfills incrementally. An effective way to do this is to break the backfill into small batches that can be done one by one, where each batch can complete within a few minutes. If your job shuts down in the middle of a batch, you can just restart that batch and you don’t have to worry about missing data.

Rate Control - The rate at which you’re backfilling data should be tunable. If there’s less production traffic, it makes sense to go faster (and to go slower during times of high load). Uber adjusted the backfill service’s rate (in requests per second) using additive-increase/multiplicative-decrease: the rate goes up by a fixed amount while the system is healthy and is cut by a multiplicative factor when there are signs of overload.

Logging - It can be very tempting to set up extensive logging during the backfill to help with debugging and monitoring progress. However, migrating large amounts of data will quickly put a ton of pressure on your logging infrastructure. Therefore, you should set up rate limits on all your migration logging so you don’t accidentally overwhelm the logging system.
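Two of the strategies above, batching and rate control, fit together naturally. Below is a minimal Python sketch, not Uber's implementation: `AimdRate`, `run_backfill`, the default numbers and the `is_overloaded` health check are all assumptions for illustration. The checkpoint is an in-memory set here; a real job would keep it in durable storage so a restarted job can pick up where it left off.

```python
import time


class AimdRate:
    """Additive-increase/multiplicative-decrease controller (records/sec)."""

    def __init__(self, start=10.0, step=5.0, backoff=0.5,
                 floor=1.0, ceiling=1000.0):
        self.rate = start
        self.step = step        # added when the system looks healthy
        self.backoff = backoff  # multiplied in when the system is overloaded
        self.floor = floor
        self.ceiling = ceiling

    def update(self, overloaded):
        if overloaded:
            self.rate = max(self.floor, self.rate * self.backoff)
        else:
            self.rate = min(self.ceiling, self.rate + self.step)
        return self.rate


def run_backfill(batches, copy_batch, checkpoint, is_overloaded, rate,
                 sleep=time.sleep):
    """Copy batches one at a time, skipping any already in the checkpoint.

    batches:       iterable of (batch_id, records)
    copy_batch:    writes records to the new store; assumed idempotent so a
                   batch interrupted by a crash can simply be re-run
    checkpoint:    set of completed batch ids
    is_overloaded: hypothetical health check on the old/new databases
    """
    for batch_id, records in batches:
        if batch_id in checkpoint:
            continue  # finished in an earlier run
        copy_batch(records)
        checkpoint.add(batch_id)  # mark done only after the whole batch succeeded
        current = rate.update(is_overloaded())
        sleep(len(records) / current)  # pace throughput to ~current records/sec
```

Starting with a low `start` value and a small `step` also covers the "scaling slowly" advice: the job ramps up gradually and backs off sharply as soon as the health check fires.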