Tech Dive on gRPC

We'll talk about RPCs, gRPC, Pros/Cons, Real World Examples and more. Plus, principles from Deep Work by Cal Newport

Hey Everyone!

Today we’ll be talking about

Tech Dive on gRPC

What is a Remote Procedure Call?

Why use gRPC?

How gRPC works

Cons of gRPC

Communication Patterns

Performance Benchmarks

Real World gRPC (at Uber, Dropbox and Reddit)

Principles from Deep Work by Cal Newport

Deep Work over Shallow Work

Law of the Vital Few

The Two Hour Rule for Deep Work

Zeignarik Effect

Parkinson’s Law

Using Structured Procrasination

Tech Snippets

How Stripe built their Payment Retry System

Why you should hire more junior engineers

Machine Learning Engineering Open Book

Produce, don’t Consume

Introduction to gRPC

gRPC is a framework developed by Google for remote procedure calls. It was open sourced in 2015 and has since become extremely popular outside of Google. It's built on HTTP/2 and uses Google’s Protobuf (Protocol Buffers).

Over the past decade, services-oriented architectures have become more popular as engineering teams have grown (it’s difficult to coordinate 3000 engineers around a single monolith).

As you might imagine, all these services need some way to communicate with each other. Two popular architectural styles that solve this problem are REST (Representative State Transfer) and RPCs (Remote Procedure Calls).

gRPC is a framework developed at Google that is widely used for implementing RPCs.

Note - the g in gRPC does not stand for Google. This is a bit of a running joke; the g stands for something different every release. So far, the g has stood for gizmo, gandalf, godric, gangnam, great, gringotts and more.

In this article, we’ll talk about

What is a Remote Procedure Call?

Why use gRPC?

How gRPC works

Cons of gRPC

Communication Patterns

Performance Benchmarks

Real World gRPC (at Uber, Dropbox and Reddit)

What is a Remote Procedure Call?

A Remote Procedure Call (RPC) is just a way for one application to call a function (procedure) on another application and get a response back.

When you’re writing code in a program, you’re going to be making function calls to other components/services. These function calls can either be local (within the same process) or they can be remote (to another process on the same or a different machine).

When you’re working with a distributed system, RPCs are how your machines communicate with each other.

RPC is a super broad term and there are countless ways to implement a remote procedure call. Examples include

HTTP requests (these can be RESTful or not)

Using the Web Application Messaging Protocol for RPCs over WebSockets

Using RPC frameworks like Apache Thrift, JSON-RPC or gRPC

Asynchronously, by enqueuing messages with RPC instructions onto a queue like Apache Kafka

Using your own custom-made protocol

Basically, any form of “out-of-process” communication is a type of Remote Procedure Call.

The goal of an RPC framework like gRPC is to take away the complexity in making a remote procedure call, making it as easy as calling a local function (that’s the hope at least).

Why use gRPC?

gRPC is used for making remote procedure calls between machines in your system. These can be different microservices in your backend or it can be between a mobile app and your web-server.

You might be wondering, why not use a REST API?

Here’s some of the benefits of gRPC.

Scalability - gRPC uses a binary serialization format (Protocol Buffers). This means significantly smaller message sizes and faster processing times compared to JSON or XML in a REST API.

Strongly Typed - When you’re using Protocol Buffers, you define the structure and types of your messages in a

.protofile (we’ll discuss this more below). You have to define the structure of the data you’re transferring and the types of the fields. This means fewer bugs compared to parsing JSON in a REST API.Bidirectional Streaming - With gRPC, you have built-in support for the request/response paradigm (unary RPC) but you also have support for bidirectional streaming (bidirectional streaming RPC). This flexibility can be very important, especially if you’re using gRPC for communication between backend microservices (where your services can be a lot more chatty).

Cool Built-In Features - gRPC offers built-in features for things like timeouts, deadlines, load balancing, service discovery, load balancing and more. You don’t have to reinvent the wheel when using gRPC for making remote procedure calls.

Comparing gRPC with Apache Thrift

There’s quite a few RPC frameworks out there, so you might wonder why gRPC. Another big alternative is Thrift.

Apache Thrift is a binary communication protocol created at Facebook in 2007. It’s been widely adopted at many places (Uber, Slack, Venmo, etc.) but gRPC is now more popular.

Here’s some of the main differences

Design and Protocol

gRPC follows a more opinionated and structured approach. It uses Protocol Buffers as it’s serialization format and HTTP/2 for the transport protocol.

On the other hand, Thrift is designed to be flexible. For serializing data, Thrift provides a binary format but you can also use JSON, BSON and others. For transferring data over the network, you can use HTTP/2, HTTP/1, WebSockets or even custom transport protocols.

Language Support and Community

Both gRPC and Thrift support a wide range of languages. There’s libraries for C++, Java, Python, Go, PHP and more.

In terms of the community, Thrift has a longer history (Thrift was open-sourced in 2007 whereas gRPC was open-sourced in 2015) and is widely used at many tech companies.

However, gRPC has gained significant traction and has a rapidly growing community. There’s a ton of great support with tooling like Nginx, Kubernetes, Istio, Envoy and more.

Performance

Latency depends on the specific use case, network conditions and configuration. Both frameworks have been used successfully in high-performance, low-latency settings.

Reddit switched from Thrift to gRPC for performance reasons (among others) with excessive memory usage being one issue. However, most of the problems revolved around the transport protocol. We’ll delve into this in the Real World Applications section.

This is the first part of our tech dive on gRPC. The entire article is 10+ pages and 3000+ words.

In the rest of the article, we’ll delve into how you use gRPC, communication patterns, cons, performance benchmarks and real world case studies at companies like Uber, Reddit and Dropbox.

We’ll be sending the full article out tomorrow.

You can get the full article (plus 20+ other tech dives and spaced-repetition flash cards on all the content in Quastor) by subscribing to Quastor Pro here.

Tech Snippets

Subscribe to Quastor Pro for long-form articles on concepts in system design and backend engineering.

You’ll also get spaced-repetition (Anki) flash cards on all the past content in Quastor!

Past article content includes

System Design Concepts

| Tech Dives

|

Principles from Deep Work by Cal Newport

Cal Newport is an associate CS professor at Georgetown University where he researches distributed algorithms.

Since 2007, he's been writing Study Hacks where he shares his tips on being productive in the digital age. He published a fantastic book on his strategies titled "Deep Work: Rules for Focused Success in a Distracted World".

The book really helped me change my mindset around productivity and what I view as important work.

In this post, I'll go through several principles from Deep Work and give brief summaries of each one.

If you’d like to learn more, I’d highly recommend purchasing the book.

Deep Work over Shallow Work

Deep work is where you're focusing intensely on a cognitively demanding task without any interruptions. This would be where you're searching for a bug, writing a function, reading documentation, etc.

On the other hand, shallow work is where you're doing tasks that aren't cognitively demanding. Paying bills, reading email, scrolling through your Twitter feed are all examples of shallow work.

The rewards of deep work are usually far greater than shallow work. Work that society values (art, discovery, innovation, etc.) is almost always the result of deep work.

Additionally, doing deep work can be a lot more fun than doing shallow work. With deep work, it's easier to get into a flow state.

For this reason, Cal recommends minimizing/delegating shallow work whenever you can.

Two Hour Rule

The Two Hour Rule suggests that deep work sessions should be no less than two hours long. That's usually the amount of time it takes to get into flow and achieve something productive.

For sessions less than two hours, you might not have enough time to properly get into flow. And if you do get into flow, then your session might end before you've achieved anything useful.

Zeigarnik Effect

The Zeigarnik effect states that incomplete or interrupted tasks tend to stay top of mind, far more than completed tasks.

You can use this effect to be productive. When you have a large project, you should always break it down into smaller, manageable parts. In other words, you should create many "unfinished tasks". These tasks will stay top of mind because of the Zeigarnik effect and will help you avoid procrastinating.

Another strategy is to start with something easy. Tackle the easiest or most enjoyable task first. This starts the "open loop" in your mind of the other tasks you need to get done and helps nudge you into creating momentum.

Parkinson’s Law

Parkinson's Law states that work expands to fill the time available for its completion. If you give yourself a week to complete a task that can be done in a day, then you'll find ways to stretch that 1-day long task into a week's worth of work.

You can take advantage of Parkinson’s law by setting ambitious deadlines. This helps you stay on track and limit the scope of the task.

Structured Procrastination

Have you ever found yourself procrastinating on important tasks by doing less important work? You might have an important deadline for a project coming up in a few hours and instead, you're spending this time answering emails.

Structured procrastination is where you take advantage of this tendency to get other less-important tasks done while avoiding your main priority.

You prioritize your tasks based on their importance and level of difficulty. Then, you structure your day so that you tackle the most important tasks first. Whenever you need a break, you can take a low-difficulty/low-priority task from your to-do list and tackle that.

This helps you maintain a sense of accomplishment and progress even if you're taking a break from your main priority.

These are just a few of the principles from the book. I'd highly recommend checking it out if you're interested in learning more.

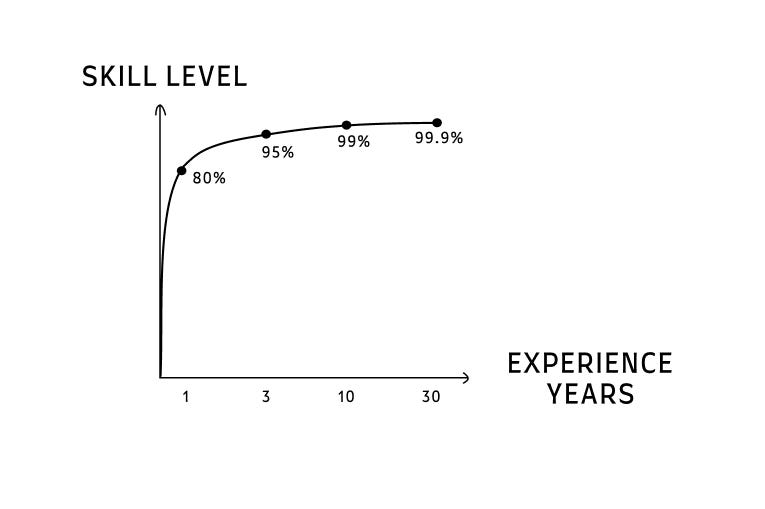

Law of the Vital Few

This law is also known as the Pareto Principle or the 80/20 rule.

It's an observation that for many areas of life, 80% of the results tend to come from 20% of the work. In other words, a small portion of your total efforts often contribute to the majority of your results.

As a developer, a small fraction of your 40-hour work week (30-40% if you're lucky) is actually spent in flow where you're making significant progress on your projects.

Cal suggests that you work on protecting and expanding this time.