Tech Dive on Apache Kafka

A long-form tech dive on Kafka, its design goals, components, messaging patterns and more. Plus, how to avoid pitfalls when choosing your tech stack, scaling a large codebase and more!

Hey Everyone!

Today we’ll be talking about

Apache Kafka Tech Dive - We’ve talked about Kafka a ton in past Quastor summaries, so this article will be a long-form tech dive into Kafka and its features

Message Queues vs. Publish/Subscribe

Tooling in the Message Queue and Pub/Sub space

Origins of Kafka and original design goals at LinkedIn

Purpose of Producers, Brokers and Consumers

Kafka Messaging Patterns

The Kafka Ecosystem

Criticisms of Kafka and their solutions

Scaling Kafka at Big Tech companies

How Faire Scaled Their Engineering Team to Hundreds of Developers

Hiring developers who are customer focused

Implementing code review and testing early on

Tracking KPIs around developer velocity

Factors that make a good KPI

Tech Snippets

The New Edition of Eloquent JavaScript (awesome, free book to learn JS)

How to Avoid Pitfalls when choosing your Tech Stack

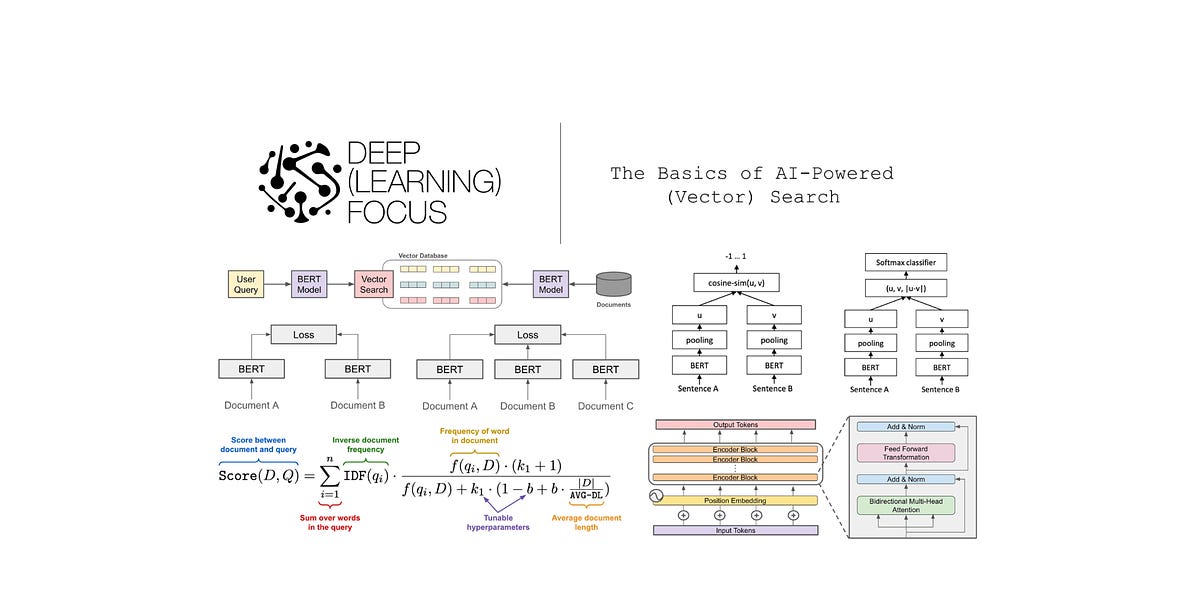

The Basics of AI-Powered (Vector Search)

How to Scale a Large Codebase

How (not) to Apply for a Software Job

How Engineering Leaders Stay Up to Date

Tech Dive - Apache Kafka

With the rise of Web 2.0 (websites like Facebook, Twitter, YouTube and LinkedIn with user-generated content), engineers needed to build highly scalable backends that can process petabytes of data.

To do this, Asynchronous Communication has become an essential design pattern. You have backend services (producers) that create data objects and other backend services (consumers) that use these objects. With an asynchronous system, you add a buffer that stores objects generated by the producers until they can be processed by the downstream services (consumers).

Tools that help you with this are message queues like RabbitMQ and event streaming platforms like Apache Kafka. The latter has grown extremely popular over the last decade.

In this article, we’ll be delving into Kafka. We’ll talk about its architecture, usage patterns, criticisms, ecosystem and more.

Message Queue vs. Publish/Subscribe

With asynchronous communication, you need some kind of buffer to sit in-between the producers and the consumers. Two commonly used types of buffers are message queues and publish-subscribe systems. We’ll delve into both and talk about their differences.

A traditional message queue sits between two components: the producer and the consumer.

The producer produces events at a certain rate and the consumer processes these events at a different rate. The mismatch in production/processing rates means we need a buffer in-between these two to prevent the consumer from being overwhelmed. The message queue serves this purpose.
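To see the buffering idea in action, here's a minimal sketch using Python's standard library. It is not how a real message broker is built; the event names and the 10 ms processing delay are made up for illustration. The producer pushes events as fast as it can, and the slower consumer works through them at its own pace.

```python
import queue
import threading
import time

buffer = queue.Queue()  # the in-memory "message queue" between the two services
processed = []


def producer():
    for i in range(5):
        buffer.put(f"event-{i}")  # returns right away; doesn't wait for the consumer
    buffer.put(None)              # sentinel value: no more events are coming


def consumer():
    while True:
        event = buffer.get()      # blocks until an event is available
        if event is None:
            break
        time.sleep(0.01)          # simulate slow processing (assumed delay)
        processed.append(event)


threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(processed)
```

The producer finishes almost instantly even though the consumer needs time for each event. The queue absorbs the difference in rates.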

With a message queue, each message is consumed by a single consumer that listens to the queue.

With Publish/Subscribe systems, each event can be processed by multiple consumers who are listening to the topic. This is essential when you want multiple consumers to all receive the same messages from a producer.

Pub/Sub systems introduce the concept of a topic, which is analogous to a folder in a filesystem. You’ll have multiple topics (to categorize your messages) and you can have multiple producers writing to each topic and multiple consumers reading from a topic.

Pub/Sub is an extremely popular pattern in the real world. If you have a video sharing website, you might publish a message to Kafka whenever a new video gets submitted. Then, you’ll have multiple consumers subscribing to those messages: a consumer for the subtitles-generator service, another consumer for the piracy-checking service, another for the transcoding service, etc.
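To make the difference between the two models concrete, here's a small in-memory sketch. It doesn't reflect the internals of Kafka or RabbitMQ; the class names (MessageQueue, PubSubTopic) and the service names are hypothetical and only serve the example.

```python
from collections import deque


class MessageQueue:
    """Point-to-point: each message is handed to exactly one consumer."""

    def __init__(self):
        self._messages = deque()

    def send(self, message):
        self._messages.append(message)

    def receive(self):
        # Whoever calls receive() first takes the message off the queue.
        return self._messages.popleft() if self._messages else None


class PubSubTopic:
    """Publish/subscribe: every subscriber gets its own copy of each message."""

    def __init__(self):
        self._subscribers = {}

    def subscribe(self, name):
        self._subscribers[name] = []
        return self._subscribers[name]

    def publish(self, message):
        for inbox in self._subscribers.values():
            inbox.append(message)


# Message queue: two workers compete for the same messages.
mq = MessageQueue()
mq.send("video-1")
mq.send("video-2")
worker_a = mq.receive()
worker_b = mq.receive()

# Pub/Sub: both services see every uploaded video.
topic = PubSubTopic()
subtitles = topic.subscribe("subtitles-generator")
transcoder = topic.subscribe("transcoding")
topic.publish("video-1")
topic.publish("video-2")

print(worker_a, worker_b)     # video-1 video-2
print(subtitles, transcoder)  # ['video-1', 'video-2'] ['video-1', 'video-2']
```

With the queue, the two workers split the messages between them. With the topic, each subscribing service receives the full stream.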

Possible Tools

In the Message Queue space, there’s a plethora of different tools like

AWS SQS - AWS Simple Queue Service is a fully managed queueing service that is extremely scalable.

RabbitMQ - open source message broker that can scale horizontally. It has an extensive plugin ecosystem so you can easily support more message protocols, add monitoring/management tooling and more.

ActiveMQ - an open source message broker that’s highly scalable and commonly used in Java environments. (It’s written in Java)

In the Publish/Subscribe space, you have tools like

Apache Kafka - Kafka is commonly referred to as an event-streaming service (an implementation of Pub/Sub). It’s designed to be horizontally scalable and transfer events between hundreds of producers and consumers.

Redis Pub/Sub - Part of Redis (an in-memory database). It’s extremely simple and very low latency so it’s great if you need extremely high throughput.

AWS SNS - AWS Simple Notification Service is a fully managed Pub/Sub service by AWS. Publishers send messages to a topic and Consumers can subscribe to specific SNS topics through HTTP (and other Amazon services).

Now, we’ll delve into Kafka.

Origins of Kafka

In the late 2000s, LinkedIn needed a highly scalable, reliable data pipeline for intaking data from their user-activity tracking system and their monitoring system. They looked at ActiveMQ, a popular message queue, but it couldn’t handle their scale.

Jay Kreps and his team built Kafka, a messaging platform, to solve this issue, and LinkedIn open-sourced it in 2011. Their goals were around

Decoupling - Multiple producers and consumers should be able to push/pull messages from a topic. The system needed to follow a Pub/Sub model.

High Throughput - The system should allow for easy horizontal scaling of topics so it could handle a high number of messages.

Reliability - The system should provide message deliverability guarantees. Data should also be written to disk and have multiple replicas to provide durability.

Language Agnostic - Messages are byte arrays so you can use JSON or a data format like Avro.

How Kafka Works

A Kafka system consists of several components

Producer - Any service that pushes messages to Kafka is a producer.

Consumer - Any service that consumes (pulls) messages from Kafka is a consumer.

Kafka Brokers - The Kafka cluster is composed of a network of nodes called brokers. Each machine/instance/container running a Kafka process is called a broker.

Producer

A Producer publishes messages (also known as events) to Kafka. Messages are immutable and have a timestamp, a value and optional key/headers. The value can be any format, so you can use XML, JSON, Avro, etc. Typically, you’ll want to keep message sizes small (1 MB is the default max size).

Messages in Kafka are stored in topics, which are the core structure for organizing and storing your messages. You can think of a topic as a log file that stores all the messages and maintains their order. New messages are appended to the end of the topic.

If you’re using Kafka to store user activity logs, you might have different topics for clicks, purchases, add-to-carts, etc. Multiple producers can write to a single Kafka topic (and multiple consumers can read from a single topic).
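Here's a rough sketch of the message and topic model described above. This is not Kafka's client API; the Message and TopicLog classes and their field names are hypothetical and only mirror the description (immutable messages with a timestamp, value and optional key/headers, appended to the end of a log).

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)  # frozen=True mirrors the fact that messages are immutable
class Message:
    value: bytes                  # payload in any format (JSON, Avro, XML, ...)
    key: Optional[bytes] = None   # optional
    headers: tuple = ()           # optional
    timestamp_ms: int = field(default_factory=lambda: int(time.time() * 1000))


class TopicLog:
    """Hypothetical append-only log: messages are only ever added at the end."""

    def __init__(self, name):
        self.name = name
        self._entries = []

    def append(self, message):
        self._entries.append(message)
        return len(self._entries) - 1  # position of the new message in the log

    def read_from(self, position):
        return self._entries[position:]


clicks = TopicLog("clicks")
first = clicks.append(Message(value=b'{"user": 1, "button": "buy"}'))
second = clicks.append(Message(value=b'{"user": 2, "button": "share"}', key=b"user-2"))

print(first, second)  # 0 1
print([m.value for m in clicks.read_from(1)])
```

Because the log is append-only, a reader that remembers its position can always pick up where it left off and see messages in the order they were written.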

When a producer needs to send messages to Kafka, it sends them to a Kafka broker.

Kafka Broker

In your Kafka cluster, the individual servers are referred to as brokers.

Brokers will handle

Receiving Messages - accepting messages from producers

Storing Messages - storing them on disk organized by topic. Each message gets an offset that uniquely identifies it within its log.

Serving Messages - sending messages to consumer services when the consumer requests it

If you have a large backend with thousands of producers/consumers and billions of messages per day, having a single Kafka broker is obviously not going to be sufficient.

Kafka is designed to easily scale horizontally with hundreds of brokers in a single Kafka cluster.

Kafka Scalability

As we mentioned, your messages in Kafka are split into topics. Topics are the core structure for organizing and storing your messages.

In order to be horizontally scalable, each topic can be split up into partitions. Each of these partitions can be put on different Kafka brokers so you can split a specific topic across multiple machines.

You can also create replicas of each partition for fault tolerance (in case a machine fails). Replicas in Kafka work off a leader-follower model, where one replica of each partition becomes the leader and handles all reads/writes. The follower replicas passively copy data from the leader for redundancy purposes. Kafka also has a feature to allow these follower replicas to serve reads.
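The leader-follower idea can be sketched in a few lines. This is a heavily simplified model with hypothetical class names, not Kafka's actual replication protocol. In particular, the failover rule here (promote the follower with the longest log) is an assumption for the example; real Kafka picks a new leader from the set of in-sync replicas.

```python
class Replica:
    def __init__(self, broker_id):
        self.broker_id = broker_id
        self.log = []


class ReplicatedPartition:
    """Hypothetical model of one partition with a leader and followers."""

    def __init__(self, broker_ids):
        self.replicas = [Replica(b) for b in broker_ids]
        self.leader = self.replicas[0]

    def write(self, message):
        # Producers only ever write to the leader replica.
        self.leader.log.append(message)

    def replicate(self):
        # Each follower copies the messages it is missing from the leader.
        for replica in self.replicas:
            if replica is not self.leader:
                replica.log.extend(self.leader.log[len(replica.log):])

    def fail_leader(self):
        # Simplification: promote the follower with the longest log.
        self.replicas.remove(self.leader)
        self.leader = max(self.replicas, key=lambda r: len(r.log))


partition = ReplicatedPartition(broker_ids=[1, 2, 3])
partition.write("order-created")
partition.write("order-paid")
partition.replicate()

partition.fail_leader()            # the machine hosting the leader dies
print(partition.leader.broker_id)  # 2
print(partition.leader.log)        # ['order-created', 'order-paid']
```

Since the followers had already copied the leader's data, the partition keeps all of its messages after the failure.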

Important Note - when you split up a Kafka topic into partitions, your messages in the topic are ordered within each partition but not across partitions. Kafka guarantees the order of messages at the partition-level.

Choosing the right partitioning strategy is important if you want to set up an efficient Kafka cluster. You can randomly assign messages to partitions, use round-robin or use a custom partitioning strategy (where you specify the target partition for each message).
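Here's a sketch of two partitioning strategies. It assumes a topic with 3 partitions and uses CRC32 for hashing because it ships with Python's standard library; Kafka's own clients use a different hash function, so don't expect the same partition numbers. Hashing the message key is one common strategy: messages with the same key land in the same partition, so they stay in order relative to each other.

```python
import itertools
import zlib

NUM_PARTITIONS = 3  # assumed partition count for the example topic


def key_hash_partition(key: bytes) -> int:
    # Same key -> same partition, so messages for one key stay in order.
    return zlib.crc32(key) % NUM_PARTITIONS


_counter = itertools.count()


def round_robin_partition() -> int:
    # Spreads messages evenly, with no ordering relationship between them.
    return next(_counter) % NUM_PARTITIONS


partitions = {p: [] for p in range(NUM_PARTITIONS)}

events = [
    (b"user-1", "click"),
    (b"user-2", "click"),
    (b"user-1", "add-to-cart"),
    (b"user-1", "purchase"),
]
for key, action in events:
    partitions[key_hash_partition(key)].append((key, action))

user1_partition = partitions[key_hash_partition(b"user-1")]
user1_actions = [action for key, action in user1_partition if key == b"user-1"]
print(user1_actions)  # ['click', 'add-to-cart', 'purchase']

rr = [round_robin_partition() for _ in range(4)]
print(rr)  # [0, 1, 2, 0]
```

With round-robin, the three user-1 events could end up in three different partitions, and a consumer might see the purchase before the click.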

Data Storage and Retention

Kafka Brokers are responsible for writing messages to disk. Each broker will divide its data into segment files and store them on disk. This structure helps brokers ensure high throughput for reads/writes. The exact mechanics of how this works are out of scope for this article, but here’s a fantastic dive on Kafka’s storage internals.

For data retention, Kafka provides several options

Time-based Retention - Data is retained for a specific period of time. After that retention period, it is eligible for deletion. This is the default policy with Kafka (with a 7-day retention period).

Size-based Retention - Data is kept up to a specified size limit. Once the total size of the stored data exceeds this limit, the oldest data is deleted to make room for new messages.
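The two policies can be sketched as simple filters over a list of segments. This is a simplified model, not the broker's actual cleanup logic: the Segment class, the timestamps and the sizes are made up for illustration, and real brokers handle details this sketch ignores (for example, the segment currently being written to). In Kafka, these policies are controlled through the topic-level settings retention.ms and retention.bytes.

```python
from dataclasses import dataclass

DAY_MS = 24 * 60 * 60 * 1000


@dataclass
class Segment:
    created_at_ms: int
    size_bytes: int


def apply_time_retention(segments, now_ms, retention_ms):
    # Keep only segments that are still within the retention period.
    return [s for s in segments if now_ms - s.created_at_ms <= retention_ms]


def apply_size_retention(segments, retention_bytes):
    # Segments are ordered oldest first; drop the oldest until we fit the limit.
    kept = list(segments)
    while kept and sum(s.size_bytes for s in kept) > retention_bytes:
        kept.pop(0)
    return kept


now = 10 * DAY_MS
segments = [
    Segment(created_at_ms=1 * DAY_MS, size_bytes=400),  # 9 days old
    Segment(created_at_ms=5 * DAY_MS, size_bytes=400),  # 5 days old
    Segment(created_at_ms=9 * DAY_MS, size_bytes=400),  # 1 day old
]

by_time = apply_time_retention(segments, now_ms=now, retention_ms=7 * DAY_MS)
by_size = apply_size_retention(segments, retention_bytes=1000)

print(len(by_time), len(by_size))  # 2 2
```

In both cases the oldest segment is the one that gets dropped: it is older than 7 days, and removing it brings the total from 1,200 bytes down to 800.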

This is the first part of our Tech Dive on Apache Kafka.

The entire article is 10+ pages and 3000+ words.

In the rest of the article, we’ll delve into Kafka Consumers, Messaging Patterns, the Kafka Ecosystem, Criticisms, Scaling Kafka at Big Tech companies and more.

You can get the full article (and 20+ other tech dives) by subscribing to Quastor Pro here.

Tech Snippets

Subscribe to Quastor Pro for long-form articles on concepts in system design and backend engineering.

Past article content includes

System Design Concepts

Tech Dives

When you subscribe, you’ll also get Spaced Repetition Flashcards for reviewing all the main concepts discussed in prior Quastor articles.

How Faire Maintained Engineering Velocity as They Scaled

Faire is an online marketplace that connects small businesses with makers and brands. A wide variety of products are sold on Faire, ranging from home decor to fashion accessories and much more. Businesses can use Faire to order products they see demand for and then sell them to customers.

Faire was started in 2017 and became a unicorn (valued at over $1 billion) in under 2 years. Now, over 600,000 businesses are purchasing wholesale products from Faire’s online marketplace and they’re valued at over $12 billion.

Marcelo Cortes is the co-founder and CTO of Faire and he wrote a great blog post for YCombinator on how they scaled their engineering team to meet this growth.

Here’s a summary

Faire’s engineering team grew from five engineers to hundreds in just a few years. They were able to sustain their pace of engineering execution by adhering to four important elements.

Hiring the right engineers

Building solid long-term foundations

Tracking metrics for decision-making

Keeping teams small and independent

Hiring the Right Engineers

When you have a huge backlog of features, bug fixes, etc. that need to be shipped, it can be tempting to hire as quickly as possible.

Instead, the engineering team at Faire resisted this urge and made sure new hires met a high bar.

Specific things they looked for were…

Expertise in Faire’s core technology

They wanted to move extremely fast so they needed engineers who had significant previous experience with what Faire was building. The team had to build a complex payments infrastructure in a couple of weeks which involved integrating with multiple payment processors, asynchronous retries, processing partial refunds and a number of other features. People on the team had previous experience building the same infrastructure for Cash App at Square, so that helped tremendously.

Focused on Delivering Value to Customers

When hiring engineers, Faire looked for people who were amazing technically but were also curious about Faire’s business and were passionate about entrepreneurship.

The CTO would ask interviewees questions like “Give me examples of how you or your team impacted the business”. Their answers showed how well they understood their past company’s business and how their work impacted customers.

A positive signal is when engineering candidates proactively ask about Faire’s business/market.

Having customer-focused engineers made it much easier to shut down projects and move people around. The team was more focused on delivering value for the customer and not wedded to the specific products they were building.

Build Solid Long-Term Foundations

From day one, Faire documented their culture in their engineering handbook. They decided to embrace practices like writing tests and code reviews (contrary to other startups that might solely focus on pushing features as quickly as possible).

Faire found that they operated better with these processes and it made onboarding new developers significantly easier.

Here are four foundational elements Faire focused on.

Being Data Driven

Faire started investing in their data engineering/analytics when they were at just 10 customers. They wanted to ensure that data was a part of product decision-making.

From the start, they set up data pipelines to collect and transfer data into Redshift (their data warehouse).

They trained their team on how to use A/B testing and how to transform product ideas into statistically testable experiments. They also set principles around when to run experiments (and when not to) and when to stop experiments early.
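As an illustration of what a "statistically testable experiment" can look like, here's a sketch of a two-proportion z-test, a common way to compare conversion rates between a control and a variant. The blog post doesn't say which statistical methods Faire uses, so treat this as a generic example. The visitor and order counts are made up.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of control (a) and variant (b)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: does a new checkout button increase orders?
z, p_value = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=130, n_b=1000)
print(f"z = {z:.2f}, p = {p_value:.3f}")

# A common (but not universal) convention is to call p < 0.05 significant.
significant = p_value < 0.05
```

The product idea ("a new button will increase orders") becomes a hypothesis about two conversion rates, which the data can then support or fail to support.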

Choosing Technology

For picking technologies, they had two criteria

The team should be familiar with the tech

There should be evidence from other companies that the tech is scalable long term

They went with Java for their backend language and MySQL as their database.

Writing Tests

Many startups think they can move faster by not writing tests, but Faire found that the effort spent writing tests had a positive ROI.

They used testing to enforce, validate and document specifications. Within months of code being written, engineers will forget the exact requirements, edge cases, constraints, etc.

Having tests to check these areas helps developers to not fear changing code and unintentionally breaking things.

Faire didn’t focus on 100% code coverage, but they made sure that critical paths, edge cases, important logic, etc. were all well tested.

Code Reviews

Faire started doing code reviews after hiring their first engineer. These helped ensure quality, prevented mistakes and spread knowledge.

Some best practices they implemented for code reviews are

Be Kind. Use positive phrasing where possible. It can be easy to unintentionally come across as critical.

Don’t block changes from being merged if the issues are minor. Instead, just ask for the change verbally.

Ensure code adheres to your internal style guide. Faire switched from Java to Kotlin in 2019 and they use JetBrains’ coding conventions.

Track Engineering Metrics

To maintain engineering velocity, Faire started measuring it with metrics when they had just 20 engineers.

Some metrics they started monitoring are

CI wait time

Open Defects

Defects Resolution Time

Flaky tests

New Tickets

And more. They built dashboards to monitor these metrics.

As Faire grew to 100+ engineers, it no longer made sense to track specific engineering velocity metrics across the company.

They moved to a model where each team maintains a set of key performance indicators (KPIs) that are published as a scorecard. These show how successful the team is at maintaining its product areas and parts of the tech stack it owns.

As they rolled this process out, they also identified what makes a good KPI.

Here are some factors they found for identifying good KPIs to track.

Clearly Ladders Up to a Top-Level Business Metric

In order to convince other stakeholders to care about a KPI, you need a clear connection to a top-level business metric (revenue, reduction in expenses, increase in customer LTV, etc.). For pager volume, Faire drew the connection this way: high pager volume leads to tired and distracted engineers, which leads to lower code output and fewer features delivered.

Is Independent of other KPIs

You want to express the maximum amount of information with the fewest number of KPIs. Using KPIs that are correlated with each other (or that measure the same underlying thing) means that you’re taking attention away from another KPI that’s measuring some other area.

Is Normalized In a Meaningful Way

If you’re in a high growth environment, looking at a raw KPI can be misleading if it isn’t divided by a meaningful denominator. You want to adjust the values so that they’re easier to compare over time.

Solely looking at the infrastructure cost can be misleading if your product is growing rapidly. It might be alarming to see that infrastructure costs doubled over the past year, but if the number of users tripled then that would be less concerning.

Instead, you might want to normalize infrastructure costs by dividing the total amount spent by the number of users.
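Here's the arithmetic for that example as a quick sketch. The dollar amounts and user counts are made up; they just match the "costs doubled, users tripled" scenario.

```python
def cost_per_user(total_cost, users):
    return total_cost / users


# Hypothetical numbers: infrastructure costs doubled while users tripled.
last_year = cost_per_user(total_cost=100_000, users=50_000)
this_year = cost_per_user(total_cost=200_000, users=150_000)
change = (this_year - last_year) / last_year

print(f"{last_year:.2f} -> {this_year:.2f} per user ({change:.0%})")
# 2.00 -> 1.33 per user (-33%)
```

The raw KPI says costs went up 100%. The normalized KPI says the cost of serving each user went down by a third.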

This is a short summary of some of the advice Marcelo offered. For more lessons learnt (and additional details) you should check out the full blog post here.