Measuring Availability - System Design Series

How to think about Availability, Latency and other metrics for your system. Plus, the architecture of a Meme search engine, how database query planners work and more.

Hey Everyone!

Today we’ll be talking about

Measuring Availability in System Design

SLAs, SLOs and SLIs

Measuring Availability with Nines

Measuring Latency with Averages and Percentiles

MTTR, MTBM, RPO

Tech Snippets

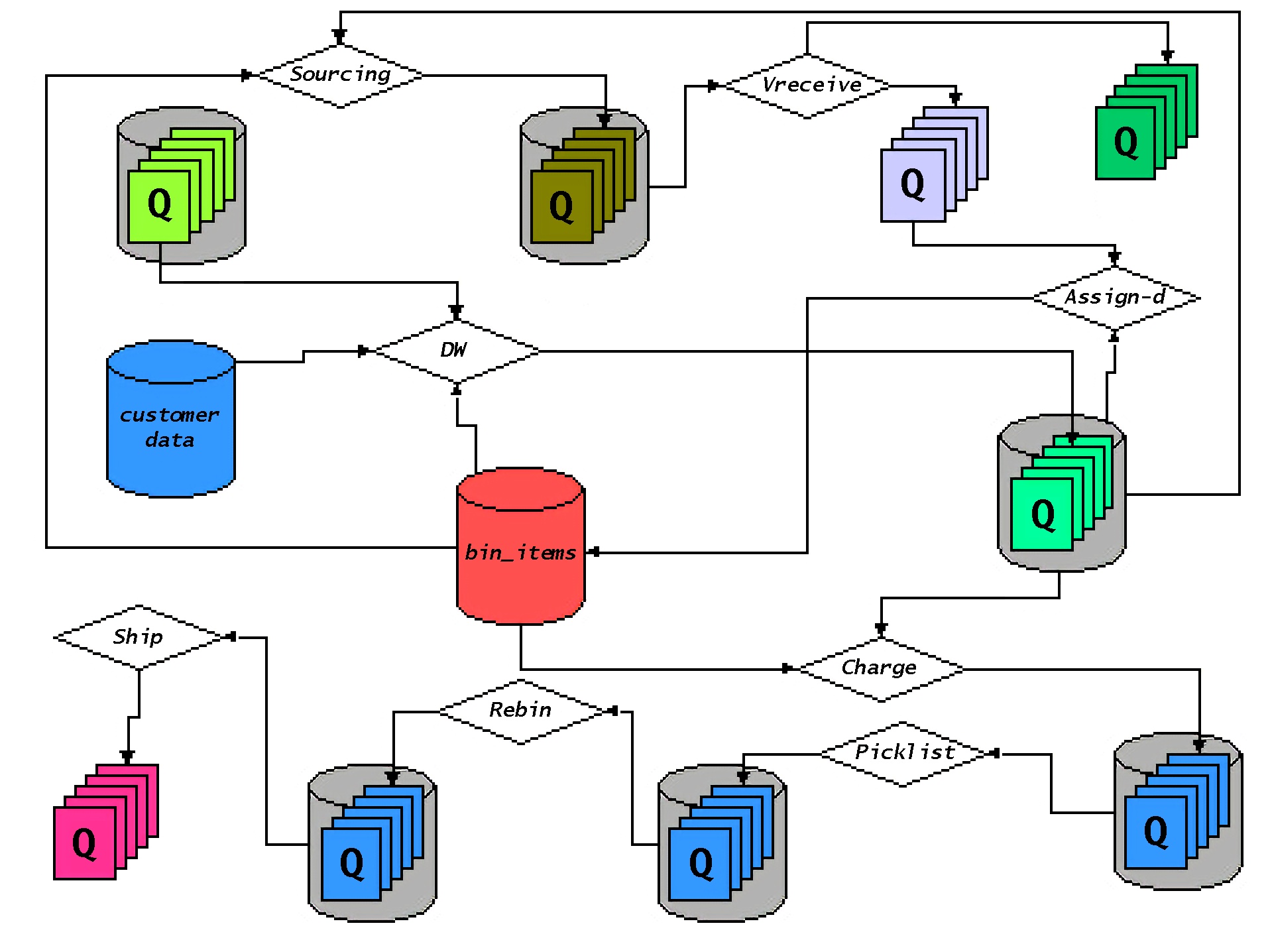

Amazon’s Distributed Computing Manifesto

A Gentle Introduction to CRDTs

Building a Meme Search Engine

How Database Query Planners work

How Google Manages their Monorepo

What is Backoff For?

Measuring Availability

When you’re building a system, an incredibly important consideration you’ll have to deal with is availability.

You’ll have to think about

What availability guarantees do you provide to your end users?

What availability guarantees do the dependencies you’re using provide to you?

These availability goals will affect how you design your system and what tradeoffs you make in terms of redundancy, autoscaling policies, message queue guarantees, and much more.

Service Level Agreement

Availability guarantees are conveyed through Service Level Agreements (SLAs). Services that you use will provide one to you, and you might have to give one to your own users (either external customers or other developers in your company who rely on your API).

Here are some examples of SLAs

These SLAs provide monthly guarantees in terms of Nines. We’ll discuss this shortly. If the provider doesn’t meet its availability agreement, it refunds a portion of the bill.

Service Level Agreements are composed of multiple Service Level Objectives (SLOs). An SLO is a specific target for the reliability of your service.

Examples of possible SLOs are

be available 99.9% of the time, with a maximum allowable downtime of ~43 minutes per month.

respond to requests within 100 milliseconds on average, with no more than 1% of requests taking longer than 200 milliseconds to complete (P99 latency).

handle 1,500 requests per second during peak periods, with a maximum allowable response time of 200 milliseconds for 99% of requests.

SLOs are based on Service Level Indicators (SLIs), which are specific measures (indicators) of how the service is performing.

The SLIs you set will depend on the service that you’re measuring. For example, you might not care about the response latency for a batch logging system that collects a bunch of logging data and transforms it. In that scenario, you might care more about the recovery point objective (maximum amount of data that can be lost during the recovery from a disaster) and say that no more than 12 hours of logging data can be lost in the event of a failure.

The Google SRE Workbook has a great table of the types of SLIs you’ll want depending on the type of service you’re measuring.

Availability

Every service will need a measure of availability. However, the exact definition will depend on the service.

You might define availability using the SLO of "successfully responds to requests within 100 milliseconds". As long as the service meets that SLO, it'll be considered available.
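As a rough illustration, that definition can be turned into a number by counting "good" requests. This is a request-based sketch rather than a time-based one. The `Request` type, the `is_good` helper, the choice to treat status codes below 500 as successful, and the rule that no traffic counts as fully available are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Request:
    status: int        # HTTP status code returned to the caller
    latency_ms: float  # time taken to respond, in milliseconds


def is_good(req: Request, threshold_ms: float = 100.0) -> bool:
    """A request is 'good' if it did not fail server-side and met the latency threshold."""
    return req.status < 500 and req.latency_ms <= threshold_ms


def availability_sli(requests: list[Request], threshold_ms: float = 100.0) -> float:
    """Fraction of requests that were good, from 0.0 to 1.0."""
    if not requests:
        return 1.0  # assumption: a window with no traffic counts as available
    good = sum(is_good(r, threshold_ms) for r in requests)
    return good / len(requests)


sample = [Request(200, 40), Request(200, 95), Request(200, 180), Request(500, 5)]
print(availability_sli(sample))  # two of the four requests are good
```

Note that the fast 500 response and the slow 200 response both count against the service here, which is the point of combining success and latency in one indicator.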

Availability is measured as a proportion, where it’s time spent available / total time. You have ~720 hours in a month and if your service is available for 715 of those hours then your availability is 99.3%.

It is usually conveyed in nines, where the number of nines is how many leading 9s appear in the availability percentage.

If your service is available 90% of the time, then that’s 1 nine. 99% is two nines. 99.9% is three nines. 99.99% is four nines, and so on. The gold standard is 5 Nines of availability, or available at least 99.999% of the time.
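The arithmetic behind the proportion and the nines can be sketched in a few lines. The function names here are hypothetical, and the sketch uses `Decimal` (with percentages passed as strings) to avoid floating point surprises near the boundaries. Anything from 90% up to, but not including, 99% counts as one nine.

```python
from decimal import Decimal


def availability_percent(available_hours, total_hours) -> Decimal:
    """Availability as a percentage: time spent available / total time."""
    return Decimal(available_hours) / Decimal(total_hours) * 100


def count_nines(percent) -> int:
    """Count the nines: 90 -> 1, 99 -> 2, 99.9 -> 3, and so on."""
    unavailable = Decimal(100) - Decimal(percent)
    if unavailable <= 0:
        raise ValueError("100% availability has no finite number of nines")
    nines = 0
    threshold = Decimal(10)  # at most 10% unavailable earns the first nine
    while unavailable <= threshold:
        nines += 1
        threshold /= 10      # each extra nine allows 10x less unavailability
    return nines


may = availability_percent(715, 720)
print(round(may, 1), count_nines(may))
for pct in ["92", "99", "99.9", "99.99", "99.999"]:
    print(pct, count_nines(pct))
```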

When you talk about availability, you also need to talk about the unit of time that you’re measuring availability in. You can measure your availability weekly, monthly, yearly, etc.

If you’re measuring it weekly, then you give an availability score for the week and that score resets every week.

If you measure availability monthly, then you must have less than roughly 43 minutes of downtime in a given month to have 3 Nines.

So, let’s say it’s the week of May 1st and you have 5 minutes of downtime that week.

After, for the week of May 8th, your service has 30 seconds of downtime.

Then you’ll have 3 Nines of availability for the week of May 1st and 4 Nines of availability for the week of May 8th.

However, if you were measuring availability monthly, then the moment you had that 5 minutes of downtime in the week of May 1st, your availability for the month of May would’ve been at most 3 Nines. Having 4 Nines for a month means less than roughly 4 minutes, 21 seconds of downtime, so that would’ve been out of reach for May.
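The weekly versus monthly comparison comes down to a downtime budget: the window length multiplied by the fraction of time you are allowed to be down. Here is a minimal sketch of that calculation. The function names are made up for illustration, and the exact monthly figures depend on how many days you assume a month has.

```python
from datetime import timedelta


def downtime_budget(availability_percent: float, window: timedelta) -> timedelta:
    """Maximum downtime allowed in `window` while still meeting the target."""
    allowed_fraction = 1 - availability_percent / 100
    return window * allowed_fraction


def meets_target(downtime: timedelta, availability_percent: float, window: timedelta) -> bool:
    """True if the observed downtime fits inside the budget for the window."""
    return downtime <= downtime_budget(availability_percent, window)


week = timedelta(days=7)
may = timedelta(days=31)

# Week of May 1st: 5 minutes of downtime
print(meets_target(timedelta(minutes=5), 99.9, week))    # within the 3 Nines weekly budget?
print(meets_target(timedelta(minutes=5), 99.99, week))   # within the 4 Nines weekly budget?

# Week of May 8th: 30 seconds of downtime
print(meets_target(timedelta(seconds=30), 99.99, week))

# Measured monthly, the same 5 minutes is checked against May's 4 Nines budget
print(meets_target(timedelta(minutes=5), 99.99, may))
print(downtime_budget(99.9, may), downtime_budget(99.99, may))
```

For a 7-day window the budgets work out to roughly 10 minutes for 3 Nines and roughly 1 minute for 4 Nines, which is why 5 minutes of downtime lands at 3 Nines for the week.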

Here’s a calculator that shows daily, weekly, monthly and yearly availability calculations for the different Nines.

Most services will measure availability monthly. At the end of every month, the availability proportion will reset.

You can read more about choosing an appropriate time window in the Google SRE Workbook.

Latency

Latency is often part of how you measure the availability of your system. You’ll frequently see SLOs where the system has to respond within a certain amount of time in order to be considered available.

It’s important to distinguish between the latency of a successful request and an unsuccessful one. For example, if your server has a configuration error (or some other fault), it might immediately respond to every HTTP request with a 500. Mixing these fast failure latencies in with your successful responses will skew the calculation and make the service look faster than it is.
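A small made-up example shows the effect. The response data and the `mean_latency` helper are hypothetical, and treating status codes of 500 and above as failures is an assumption for the sketch.

```python
from statistics import mean

# (status code, latency in ms): three normal responses and three instant 500s
responses = [
    (200, 120.0), (200, 130.0), (200, 125.0),
    (500, 2.0), (500, 3.0), (500, 1.0),
]


def mean_latency(responses, only_successful: bool) -> float:
    """Mean latency, optionally ignoring server-side failures (status >= 500)."""
    latencies = [
        ms for status, ms in responses
        if not only_successful or status < 500
    ]
    return mean(latencies)


mixed = mean_latency(responses, only_successful=False)
successful = mean_latency(responses, only_successful=True)
print(mixed, successful)  # the mixed mean is roughly half the successful-only mean
```

Tracking the failed requests separately (as an error rate, for instance) keeps the latency numbers meaningful.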

There are different ways of measuring latency, but you’ll commonly see two

Averages - Take the mean or median of the response times. If you’re using the mean, then tail latencies (extremely long response times due to network congestion, errors, etc.) can skew it; the median is much less sensitive to those outliers.

Percentiles - You’ll frequently see this as P99 or P95 latency (99th percentile latency or 95th percentile latency). If you have a P99 latency of 200 ms, then 99% of your responses are sent back within 200 ms.
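To make the difference between the two concrete, here is a sketch that computes the mean, median and percentiles for the same set of response times. The `percentile` helper uses the nearest-rank method, which is one of several common percentile definitions, so monitoring tools may report slightly different values. The sample data is invented.

```python
import math
from statistics import mean, median


def percentile(latencies_ms, p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not latencies_ms:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(latencies_ms)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# 98 fast responses, one slow one and one extremely slow one
latencies_ms = [20.0] * 98 + [150.0, 2000.0]

print("mean  ", mean(latencies_ms))    # pulled upward by the two slow responses
print("median", median(latencies_ms))  # unaffected by them
print("P95   ", percentile(latencies_ms, 95))
print("P99   ", percentile(latencies_ms, 99))
```

With this data the mean is about double the median, even though 98% of requests took 20 ms, while P99 surfaces the slow response that the median hides.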

Latency will typically go hand-in-hand with throughput, where throughput measures the number of requests your system can process in a certain interval of time (usually measured in requests per second). As requests per second go up and the system approaches its capacity, latency will typically go up as well. If you have a sudden spike in requests per second, users will experience a spike in latency until your backend’s autoscaling kicks in and you get more machines added to the server pool.

You can use load testing tools like JMeter, Gatling and more to put your backend under heavy stress and see how the average/percentile latencies change.

Very high percentile latencies (the responses that are slower than 99.9% or 99.99% of all responses) might also be important to measure, depending on the application. These latencies can be caused by network congestion, garbage collection pauses, packet loss, contention, and more.

To track tail latencies, you’ll commonly see histograms and heat maps utilized.
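A latency histogram is just a count of responses per latency bucket. The sketch below builds one by hand to show the idea. The bucket boundaries and sample latencies are invented, and in practice a metrics library would usually maintain these buckets for you.

```python
from bisect import bisect_left
from collections import Counter

# Hypothetical bucket upper bounds in milliseconds; tune these to your service
BUCKET_UPPER_BOUNDS_MS = [10, 50, 100, 200, 500, 1000]


def bucket_label(latency_ms: float) -> str:
    """Label of the first bucket whose upper bound is >= the latency."""
    index = bisect_left(BUCKET_UPPER_BOUNDS_MS, latency_ms)
    if index == len(BUCKET_UPPER_BOUNDS_MS):
        return f"> {BUCKET_UPPER_BOUNDS_MS[-1]} ms"  # overflow bucket for the tail
    return f"<= {BUCKET_UPPER_BOUNDS_MS[index]} ms"


def latency_histogram(latencies_ms) -> Counter:
    """Count how many responses fall into each bucket."""
    return Counter(bucket_label(ms) for ms in latencies_ms)


sample_ms = [5, 10, 12, 49, 180, 950, 4000]
histogram = latency_histogram(sample_ms)
for label, count in histogram.items():
    print(f"{label:>10} {'#' * count}")
```

The overflow bucket is where the tail shows up: a mean or median would hide the 4000 ms response, but the histogram keeps it visible.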

Other Metrics

There are many other metrics you can track, depending on what your use case is. Your customer requirements will dictate this.

Some other examples of commonly tracked metrics are MTTR, MTBM and RPO.

MTTR - Mean Time to Recovery measures the average time it takes to repair a failed system. Given that the system is down, how long does it take to become operational again? Reducing the MTTR is crucial to improving availability.

MTBM - Mean Time Between Maintenance measures the average time between maintenance activities on your system. Systems may have scheduled downtime (or degraded performance) for maintenance activities, so MTBM measures how often this happens.

RPO - Recovery Point Objective measures the maximum amount of data that a company can lose in the event of a disaster. It’s usually measured in time and it represents the point in time to which data must be restorable in order to minimize business impact. If a company has an RPO of 2 hours, then the company can tolerate losing up to the most recent 2 hours of data in the event of a disaster. In practice, this constrains how often data is backed up or replicated. RPO often goes hand-in-hand with MTTR: if the company can’t tolerate a significant loss of data when the system goes down, it usually can’t tolerate a long outage either, so the Site Reliability Engineers must be able to bring the system back up ASAP.
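Two of these metrics are easy to compute from timestamps. The sketch below derives MTTR from a list of incidents and checks whether a failure would stay within an RPO, given the time of the last backup. The incident data, the backup times and the function names are all hypothetical.

```python
from datetime import datetime, timedelta


def mean_time_to_recovery(incidents) -> timedelta:
    """Average outage duration over (went_down_at, recovered_at) pairs."""
    total = sum((end - start for start, end in incidents), timedelta())
    return total / len(incidents)


def meets_rpo(last_backup_at: datetime, failure_at: datetime, rpo: timedelta) -> bool:
    """True if the data written since the last backup fits within the RPO."""
    data_loss_window = failure_at - last_backup_at
    return data_loss_window <= rpo


incidents = [
    (datetime(2023, 5, 3, 10, 0), datetime(2023, 5, 3, 10, 30)),   # 30 minutes
    (datetime(2023, 5, 12, 2, 15), datetime(2023, 5, 12, 3, 45)),  # 90 minutes
    (datetime(2023, 5, 20, 18, 0), datetime(2023, 5, 20, 18, 30)), # 30 minutes
]
print(mean_time_to_recovery(incidents))  # average of the three outages

rpo = timedelta(hours=2)
last_backup = datetime(2023, 5, 20, 12, 0)
print(meets_rpo(last_backup, datetime(2023, 5, 20, 13, 30), rpo))  # 1.5 hours of data at risk
print(meets_rpo(last_backup, datetime(2023, 5, 20, 15, 0), rpo))   # 3 hours of data at risk
```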