How Uber Built an Exabyte-Scale System for Data Processing

We'll talk about data processing at Uber and how they revamped their ETL platform to make it modular and scalable. Plus, software testing anti-patterns and how to get better at finishing your side projects.

Hey Everyone!

Today we’ll be talking about

The Architecture of Uber’s ETL Platform

Introduction to ETL

Tools used for ETL

Architecture of Sparkle, Uber’s ETL framework built on Apache Spark

Tech Snippets

Software Testing Anti-Patterns

Free University Courses for Learning CS

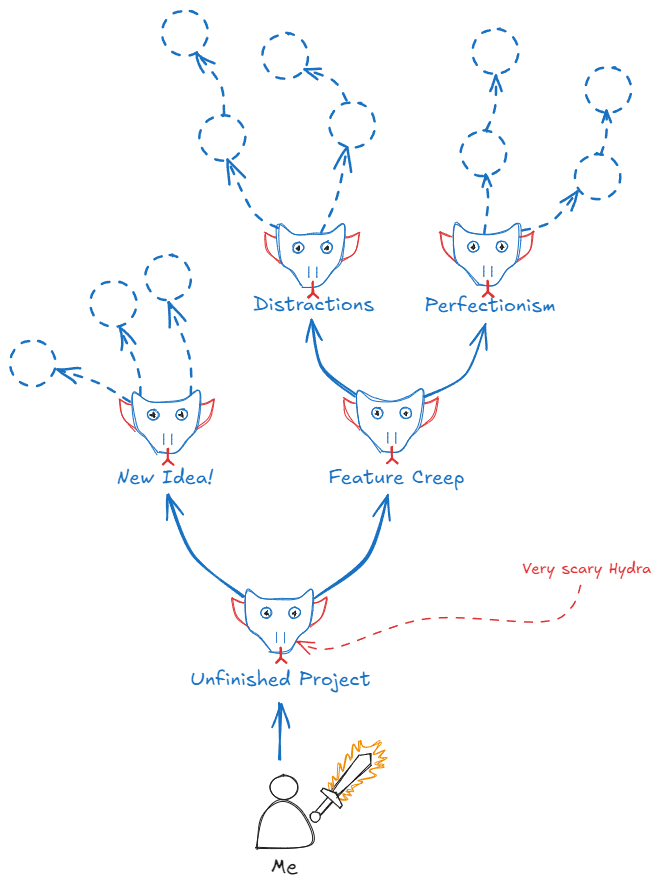

How to Get Better at Finishing Your Side Projects

15 Life and Work Principles from Jensen Huang (CEO of Nvidia)

Building product integrations is no small feat—balancing timelines, resources, and technical complexity can feel overwhelming.

Should you build integrations in-house, or is it better to leverage third-party solutions?

Nango wrote a fantastic, in-depth guide that walks you through everything you need to know to make an informed choice. They talk about the trade-offs involved and offer practical tips to make your decision easier.

In the guide, you’ll learn:

Key Considerations - Understand the costs, risks, and benefits of building vs. buying integrations.

When to Build - Discover scenarios where in-house development gives you the most control and flexibility.

When to Buy - Learn when leveraging pre-built solutions can accelerate timelines and reduce maintenance overhead.

sponsored

The Architecture of Uber’s ETL Platform

Uber is the largest ride sharing company in the world with over 150 million monthly active users and approximately 25 million daily trips.

With this scale comes a huge amount of data (and a cloud-bill that’s larger than the GDP of a small island nation). Uber generates petabytes of data daily from ride history, logs, payment transactions, etc.

This data needs to be extracted from the various data sources (payment processor, OLTP database, logs, etc.) and then loaded into data warehouses, data lakes, machine learning platforms and more.

To do this, Uber relies on ETL (Extract, Transform, Load) processes. The Uber engineering team published a terrific blog post talking about exactly how they handle ETL at their scale. They have 20,000+ critical data pipelines and 3,000+ engineers who use this system.

Introduction to ETL

Extract, Transform, Load (ETL) is the process where you

Extract - you extract data from the various data sources (places where data is created/temporarily stored). This can be a transactional database, payment processor, a CRM (Salesforce or HubSpot), message queue, etc.

Transform - you clean, validate and standardize the data. You might need to check for duplicates, handle missing values, join the data with another dataset and more.

Load - you load the data into various data sinks. This can be a data warehouse like Google BigQuery, a data lake like HDFS, archival storage like Amazon Glacier or something else.

Building data pipelines for ETL can be quite painful. You’ll need to consider several things:

Data Integrity - ensure the accuracy and consistency in your data. You’ll need to check for duplicate records, missing values, inconsistent formatting, outlier values and more.

Schema Evolution - as business requirements change, the data you’ll be processing will change. You’ll need to account for new fields, data type changes, deprecated fields, etc.

Monitoring/Debugging - you’ll need logging for the different stages of your pipeline and real-time alerts for failures/performance issues so you minimize downtime (and don’t lose data)

Scalability - the pipeline shouldn’t require a complete re-architecture as your data volume grows. You may also have to deal with bursts in incoming data depending on the usage patterns.

Reliability and Failure Recovery - For some systems, you might need to guarantee at least once processing. You’ll have to make sure that the system rarely goes down and that you have a process in place to minimize data loss in case of crashes.

Compliance - you might have to consider internal data governance/privacy policies when doing transformations.

Some common tools used for ETL include

Apache Spark - an open-source engine for large-scale data processing. It’s a great choice for complex ETL jobs at scale.

dbt (data build tool) - a toolkit for building data pipelines that encourages software engineering best practices like version control,testing, code review and more.

Apache Airflow - a popular open-source platform for orchestrating workflows. You can schedule, monitor and manage your ETL pipelines with Python.

AWS Glue - fully managed, serverless ETL service from Amazon.

Google Cloud Dataflow - fully managed service for ETL from Google Cloud

In 2023, Uber migrated all their batch workloads to Apache Spark. Recently, they built Sparkle, a framework on top of Apache Spark with the goal of simplifying data pipeline development and testing.

ETL at Uber with Sparkle

As your ETL jobs get more and more complex, it becomes crucial to use software engineering best practices when writing/maintaining them. Observability, version control, testing, documentation, etc. are a couple of best practices that have become increasingly adopted in the data community.

Leading this charge is dbt, a data engineering platform that helps you apply these best practices to your data transformations.

However, at Uber, switching from Spark to an entirely new ETL tool wasn’t possible. The scale of Uber’s platform, the developer learning curve and investment required just wasn’t worth it. (try telling your boss you need to rewrite 20,000 mission-critical data pipelines)

Instead, the Uber team decided to build Sparkle, a framework on top of Apache Spark that lets engineers write configuration-based modular ETL jobs. Sparkle added features for observability, testing, data lineage tracking and more.

The core idea behind Sparkle is modularity. Rather than writing complex, monolithic Spark jobs, engineers break their ETL logic down into a series of smaller, reusable modules. Each module can be in SQL, Java/Scala or Python and they’re defined with YAML. Check the blog post for samples of what Sparkle jobs look like.

Developers can just focus on the business logic around their data pipeline. Sparkle will handle infrastructure and boilerplate with pre-built components like

Connectors - handles all the connection details to pull data from all the various data sources at Uber

Readers/Writers - handles translating data into different formats like Parquet, JSON, Avro, etc.

Observability - provides logging, metrics and data lineage tracking

Testing - you can write unit tests for your modules using mock data and SQL assertions to make sure your transformations are doing what you expect.

Stop wasting weeks wrestling with Salesforce's API.

Nango wrote a terrific guide showing how you can build a robust Salesforce integration in just 3 hours.

This guide breaks down the entire process with actionable tips and insights that’ll save your team dozens of hours.

sponsored