How LinkedIn Scaled Their System to 5 Million Queries Per Second

How LinkedIn used BitSets, Bloom Filters, Caching Strategies and more to Scale their Safety system to 5 million queries per second. Plus, questions you'll get asked frequently as an engineering manager and tips on how to scale a large codebase.

Hey Everyone!

Today we’ll be talking about

How LinkedIn scaled their Restrictions and Enforcement System to 5 million queries per second

Introduction to BitSets and their use at LinkedIn

How Bloom Filters work and their use-cases

Full Refresh-ahead Caching and the pros/cons

The Architecture of LinkedIn’s System

Tech Snippets

Latency Numbers Every Programmer Should Know (Visualized)

Serving a Billion Web Requests with Boring Code

Lies we tell ourselves to keep using Golang

7 questions I get asked frequently as an EM

How to Scale a Large Codebase

The Architecture of LinkedIn’s Restriction Enforcement System

LinkedIn is the largest professional social network in the world, with over 1 billion users. Over 100 million messages are sent daily on the platform.

With this scale, you’ll inevitably have some bad actors causing issues on the site. It might be users sending harassing/toxic messages, spammers posting about some cryptocurrency or LinkedIn influencers sharing how their camping trip made them better at B2B sales.

To combat this malicious behavior, LinkedIn provides a number of safeguards like reporting inappropriate content and blocking problematic/annoying users.

However, implementing these safeguards at LinkedIn’s scale introduces a ton of technical challenges.

Some of the requirements LinkedIn needs for their Restrictions Enforcement system include:

High QPS - The system needs to support 4-5 million queries per second. Many user actions (viewing the feed, sending messages, etc.) require checking the restricted/blocked accounts list.

Low Latency - Latency needs to be under 5 milliseconds. Otherwise, basic actions like refreshing your feed or sending a message would take too long.

High Availability - This system needs to operate with 99.999% availability (5 9s of availability), so less than 30 seconds of downtime per month.

Low Ingestion Delay - When a user blocks/reports an account, that should be reflected in the restrictions enforcement system immediately. If they refresh their feed right after, the posts from the blocked user should be immediately hidden.

Earlier this year, LinkedIn’s engineering team published a fantastic blog post detailing how they built their restrictions enforcement system. They talked about the different generations of the system’s architecture and problems they faced along the way.

In our Quastor article, we’ll focus on the specific data structures and strategies LinkedIn used. We’ll explain them and delve into the pros and cons LinkedIn saw.

BitSets

One of the key data structures LinkedIn uses in their restrictions system is BitSets.

A BitSet is an array of boolean values where each value only takes up 1 bit of space. A bit that’s set represents a true value whereas a bit that’s not set represents false.

BitSets are extremely memory efficient. If you need to store boolean values for 1 billion users (whether a user is restricted/not restricted), you would only need 1 billion bits (approximately 125 megabytes).

To give a more concrete example of how LinkedIn uses BitSets, let’s say LinkedIn needs to store restricted/unrestricted account status for 1 billion users. Each user has a memberID from 1 to 1 billion.

To store this, they could use an array of 64-bit integers. Each integer can store the restriction status for 64 different users (we need 1 bit per user) so the array would hold ~15 million integers (around 125 megabytes of space).

If LinkedIn needs to check whether user with memberID 525234320 is restricted, the steps would be:

Divide 523,234,320 by 64 to get the index in the integer array (which would be 8,175,536)

Take 523,234,320 modulo 64 to get which bit to check in that integer (which would be 32)

Use bitwise operations to check if that specific bit is set to 1 (restricted) or 0 (not restricted)

The time and space requirements with BitSets are very efficient. Checking whether users are restricted takes constant time (since the membership lookup operations are all O(1)) and the storage necessary is only a couple hundred megabytes.

Bloom Filters

In addition to BitSets, the other data structure LinkedIn found useful was Bloom Filters.

A Bloom filter is a probabilistic data structure that lets you quickly test whether an item might be in a set. Bloom Filters are probabilistic so they will tell you if an item is in a set but will occasionally give false positives (it will mistakenly say an item is in the set when it’s not).

Under the hood, Bloom Filters use hashing to map items to a bit array. The issue is that collisions in the hashing function can cause false positives. Here’s a fantastic article that delves into how Bloom Filters work with visuals and a basic Python implementation.

For LinkedIn, they also used Bloom Filters to quickly check whether a user’s account was restricted.

The pros were that the Bloom Filters were extremely space efficient compared to traditional caching techniques (using a set or hash table).

The downside was the false positives. However, the Bloom Filter can be tuned to make false positives extremely rare and LinkedIn didn’t find it to be a big issue.

Full Refresh-ahead Caching

LinkedIn explored various caching strategies to achieve their QPS and latency requirements. One approach was their full refresh-ahead cache.

The dataset of account restrictions was quite small (thanks to using the BitSet and Bloom Filter data structures) so LinkedIn had each client application host store all restriction data in their in-memory cache.

In order to maintain cache freshness, they implemented a polling mechanism where clients would periodically check for any new changes to member restrictions.

This system resulted in a huge decrease in latencies but came with some downsides.

The client-side memory footprint ended up being substantial and strained infrastructure. Additionally, the caches were stored in-memory so they weren’t persistent. Clients had to frequently build and rebuild this cache which put strain on LinkedIn’s underlying database.

System Architecture

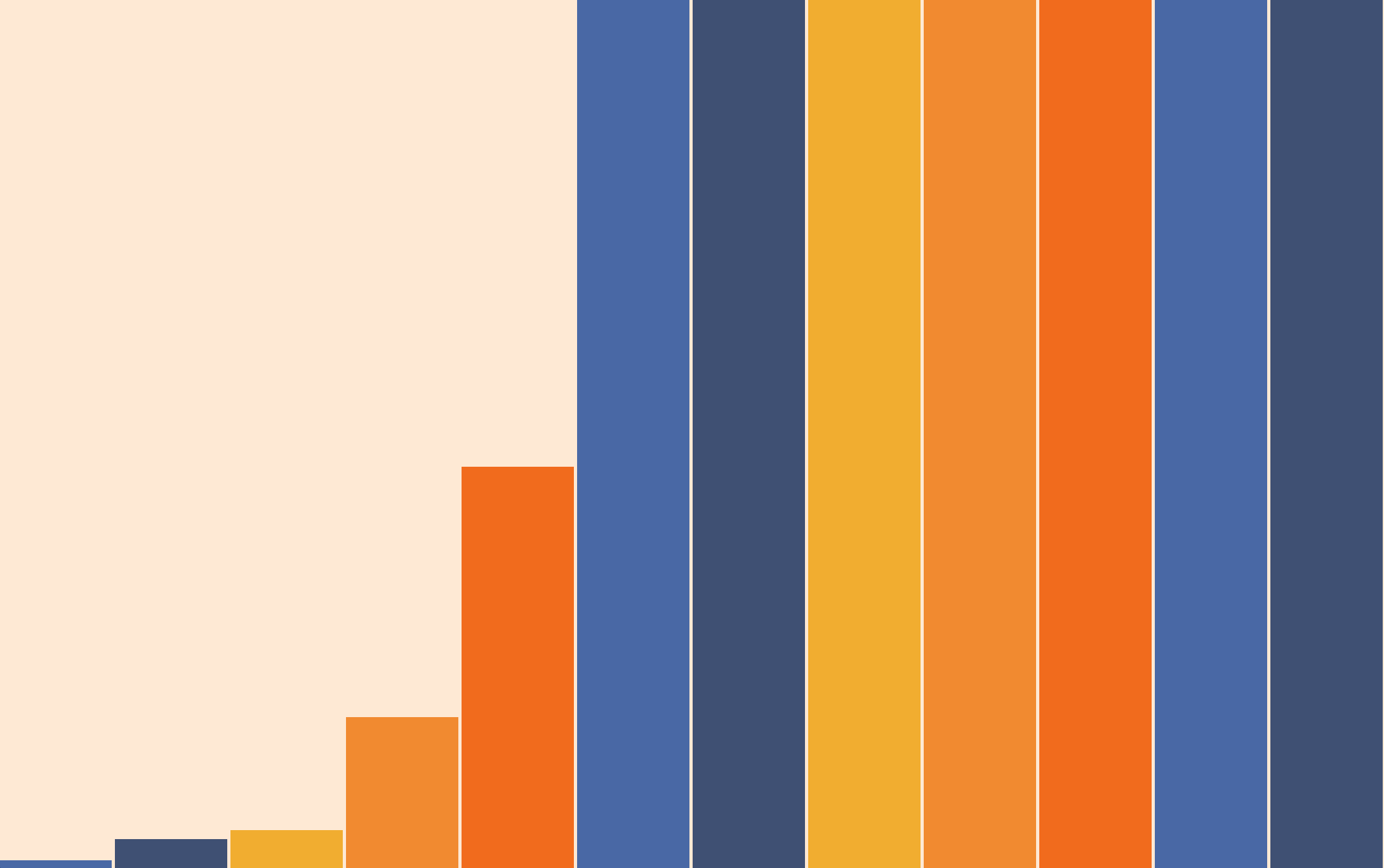

Here’s the current architecture for LinkedIn’s restriction enforcement system.

The key components are

Client Layer - Clients that use this system include the LinkedIn newsfeed, their recruiting/talent management tools and other products/services at the company. These clients use a REST API to query the system.

LinkedIn Restrictions Enforcement System - This component consists of the BitSet data structures and the main restriction enforcement system. The BitSet data structures are stored client-side and maintain a cache of all the restriction records.

Venice Database - this is the central storage (source of truth) for all user restrictions. Venice is an open-source, horizontally scalable, eventually-consistent storage system that LinkedIn built on RocksDB. You can read more about how Venice works here.

Kafka Restriction Records - When a user gets reported/blocked, the change in their account status is sent through Kafka. This allows near real-time propagation of changes.

Restriction Management System - LinkedIn has a legacy system (check the blog post for a full explanation on the previous generations) of the Restriction Enforcement system that connects to Espresso to store and update blocked/restricted users. Espresso is LinkedIn’s distributed, document data store that’s built on MySQL. You can read more about Espresso here.