How Google stores Exabytes of Data

A look into Google Colossus, a massive distributed file system that stores exabytes of data. Plus, when is short tenure a red flag? How to manage technical quality in a codebase and more.

Hey Everyone!

Today we’ll be talking about

How Google stores Exabytes of Data

Colossus is Google’s Distributed File System and it can scale to tens of thousands of machines and exabytes of storage.

It’s the next generation of Google File System, a distributed file system built in the early 2000s that inspired the Hadoop Distributed File System.

We’ll talk about some history of Google File System and MapReduce and then go into how Colossus works.

Tech Snippets

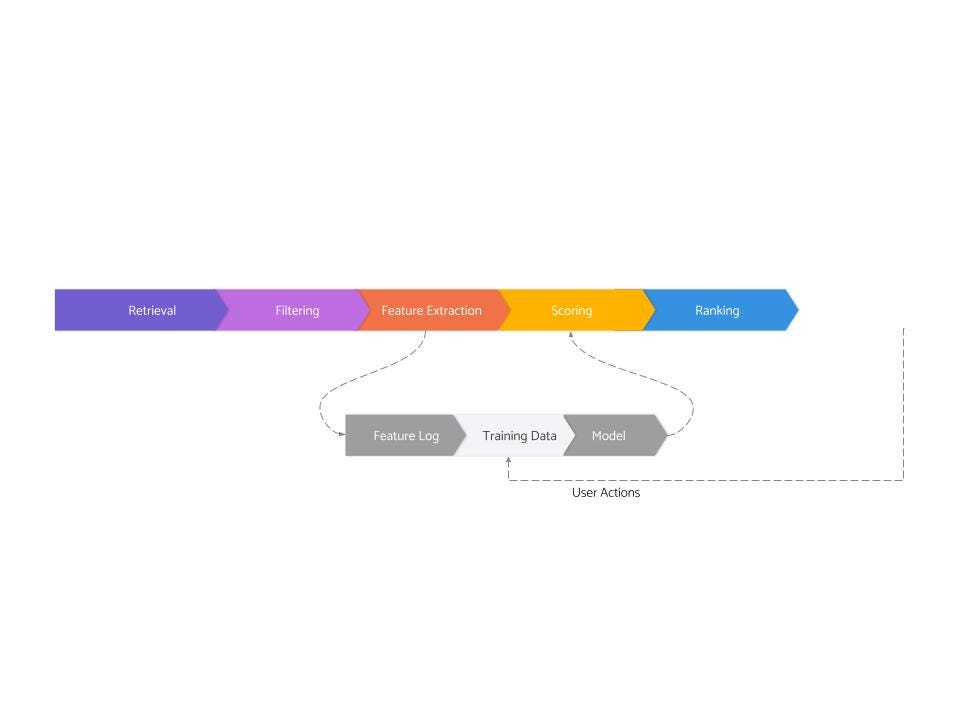

Real World Recommendation Systems

Managing Technical Quality in a Codebase

How Dropbox Rewrote Their Web Serving Stack

AWS Builder’s Library - How to Instrument Your System for Visibility

When Is Short Tenure a Red Flag?

How Google Stores Exabytes of Data

Google Colossus is a massive distributed storage system that Google uses to store and manage exabytes of data (1 exabyte is 1 million terabytes). Colossus is the next generation of Google File System (GFS), which was first introduced in 2003.

In the early 2000s, Sanjay Ghemawat, Jeff Dean and other Google engineers published two landmark papers in distributed systems: the Google File System paper and the MapReduce paper.

GFS was a distributed file system that could scale to over a thousand nodes and hundreds of terabytes of storage. The system consisted of 3 main components: a master node, Chunk Servers and clients.

The master was responsible for managing the metadata of all files stored in GFS. It maintained information about the location and status of the files. The Chunk Servers stored the actual data. Each managed a portion of the data stored in GFS, and they replicated the data to other chunk servers to ensure fault tolerance. The GFS client library could then be run on other machines that wanted to create/read/delete files in a GFS cluster.

To make the system scalable, Google engineers minimized the number of operations that the master had to perform. If a client wanted to access a file, it would send a query to the master. The master would reply with the locations of the chunk servers holding that file’s chunks, and the client would then download the data directly from those chunk servers. The master wasn’t involved in the minutiae of transferring data from chunk servers to clients.
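Here is a minimal Python sketch of that read path. It is an illustration only, not Google's code: the `Master`, `ChunkServer` and `read_file` names, the chunk handles and the file path are all made up for this example. The point is that the master answers one metadata lookup, and the bytes come straight from the chunk servers.

```python
from dataclasses import dataclass, field


@dataclass
class ChunkServer:
    """Hypothetical chunk server: stores chunk bytes keyed by a chunk handle."""
    name: str
    chunks: dict = field(default_factory=dict)

    def read(self, handle: str) -> bytes:
        return self.chunks[handle]


@dataclass
class Master:
    """Hypothetical master: holds metadata only, never the file contents."""
    file_chunks: dict = field(default_factory=dict)      # path -> [chunk handle]
    chunk_locations: dict = field(default_factory=dict)  # handle -> [server name]
    lookups: int = 0

    def locate(self, path: str) -> list:
        self.lookups += 1
        return [(h, self.chunk_locations[h]) for h in self.file_chunks[path]]


def read_file(path: str, master: Master, servers: dict) -> bytes:
    data = b""
    # One metadata round trip to the master...
    for handle, replicas in master.locate(path):
        # ...then the bytes are fetched directly from a chunk server.
        data += servers[replicas[0]].read(handle)
    return data


# Toy cluster: one file split into two chunks on two servers.
servers = {name: ChunkServer(name) for name in ("cs1", "cs2")}
servers["cs1"].chunks["h0"] = b"hello "
servers["cs2"].chunks["h1"] = b"world"
master = Master(
    file_chunks={"/logs/a": ["h0", "h1"]},
    chunk_locations={"h0": ["cs1"], "h1": ["cs2"]},
)
print(read_file("/logs/a", master, servers))
```

However large the file is, the master only hands out locations in this sketch, which is why it can serve many clients without becoming the data bottleneck.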

In order to efficiently run computations on the data stored on GFS, Google created MapReduce, a programming model and framework for processing large data sets. It consists of two main functions: the map function and the reduce function.

Map is responsible for processing the input data locally and generating a set of intermediate key-value pairs. The reduce function will then process these intermediate key-value pairs in parallel and combine them to generate the final output. MapReduce is designed to maximize parallel processing while minimizing the amount of network bandwidth needed (run computations locally when possible).
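To make the two functions concrete, here is a toy single-process word count in Python. It is a sketch of the programming model only, under the assumption that everything fits in memory; a real MapReduce run spreads the map and reduce calls over many machines. The names `map_fn`, `reduce_fn` and `run_mapreduce` are our own.

```python
from collections import defaultdict


def map_fn(document: str):
    """Map: emit an intermediate (word, 1) pair for every word."""
    for word in document.lower().split():
        yield word, 1


def reduce_fn(word: str, counts: list):
    """Reduce: combine all values that share the same key."""
    return word, sum(counts)


def run_mapreduce(documents: list) -> dict:
    groups = defaultdict(list)
    # Map phase: each document could be handled on the machine that stores it.
    for doc in documents:
        for key, value in map_fn(doc):
            groups[key].append(value)  # "shuffle": group values by key
    # Reduce phase: each key can be reduced independently, so in parallel.
    return dict(reduce_fn(key, values) for key, values in groups.items())


counts = run_mapreduce(["the quick fox", "the lazy dog", "The end"])
print(counts["the"])
```

Because each key is reduced on its own, the reduce step can be split across workers without any coordination between them.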

For more details, we wrote past deep dives on MapReduce and Google File System.

These technologies were instrumental in scaling Google and they were also reimplemented by engineers at Yahoo. In 2006, the Yahoo projects were open sourced and became the Apache Hadoop project. Since then, Hadoop has exploded into a massive ecosystem of data engineering projects.

One issue with Google File System was the decision to have a single master node for storing and managing the GFS cluster’s metadata. You can read Section 2.4 Single Master in the GFS paper for an explanation of why Google made the decision of having a single master.

This choice worked well for batch-oriented applications like web crawling and indexing websites. However, it could not meet the latency requirements for applications like YouTube, where you need to serve a video extremely quickly.

Having the master node go down meant the cluster would be unavailable for a couple of seconds (during the automatic failover). This was no bueno for low latency applications.

To deal with this issue (and add other improvements), Google created Colossus, the successor to Google File System.

Dean Hildebrand is a Technical Director at Google and Denis Serenyi is a Tech Lead on the Google Cloud Storage team. They posted a great talk on YouTube delving into Google’s infrastructure and how Colossus works.

Infrastructure at Google

The Google Cloud Platform and all of Google’s products (Search, YouTube, Gmail, etc.) are powered by the same underlying infrastructure.

It consists of three core building blocks:

Borg - A cluster management system that serves as the basis for Kubernetes. It launches and manages compute services at Google. Borg runs hundreds of thousands of jobs across many clusters (with each having thousands of machines). For more details, Google published a paper talking about how Borg works.

Spanner - A highly scalable, consistent relational database with support for distributed transactions. Under the hood, Spanner stores its data on Google Colossus. It uses TrueTime, Google’s clock synchronization service to provide ordering and consistency guarantees. For more details, check out the Spanner paper.

Colossus - Google’s successor to Google File System. This is a distributed file system that stores exabytes of data with low latency and high reliability. For more details on how it works, keep reading.

How Colossus Works

Similar to Google File System, Colossus consists of three components:

Client Library

Control Plane

D Servers

Applications that need to store/retrieve data on Colossus will do so with the client library, which abstracts away all the communication the app needs to do with the Control Plane and D servers. Users of Colossus can select different service tiers based on their latency/availability/reliability requirements. They can also choose their own data encoding based on the performance/cost trade-offs they need to make.

The Colossus Control Plane is the biggest improvement compared to Google File System, and it consists of Curators and Custodians.

Curators replace the functionality of the master node, removing the single point of failure. When a client needs a certain file, it will query a curator node. The curator will respond with the locations of the various data servers that are holding that file. The client can then query the data servers directly to download the file.

When creating files, the client can send a request to the Curator node to obtain a lease. The curator node will create the new file on the D servers and send the locations of the servers back to the client. The client can then write directly to the D servers and release the lease when it’s done.
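The create-and-write flow above can be sketched in a few lines of Python. Everything here is hypothetical: the `Curator` and `DServer` classes, the method names, the replica count of 2 and the in-memory dictionaries (standing in for the real metadata store) are assumptions made for illustration, not the Colossus API.

```python
import itertools


class DServer:
    """Hypothetical D server: just holds bytes for each file path."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}

    def create(self, path):
        self.blocks[path] = b""

    def append(self, path, data):
        self.blocks[path] += data


class Curator:
    """Hypothetical curator: tracks metadata and leases, not file data."""

    def __init__(self, d_servers, replicas=2):
        self.d_servers = d_servers
        self.replicas = replicas
        self.metadata = {}  # path -> D server names (stand-in for the metadata store)
        self.leases = {}    # path -> lease id
        self._ids = itertools.count(1)

    def create_file(self, path):
        if path in self.leases:
            raise RuntimeError(f"{path} is already leased")
        chosen = self.d_servers[: self.replicas]  # naive placement for the sketch
        for server in chosen:
            server.create(path)
        self.metadata[path] = [s.name for s in chosen]
        lease_id = next(self._ids)
        self.leases[path] = lease_id
        return lease_id, chosen

    def release(self, path, lease_id):
        if self.leases.get(path) != lease_id:
            raise RuntimeError("lease mismatch")
        del self.leases[path]

    def locate(self, path):
        return self.metadata[path]


d_servers = [DServer("d1"), DServer("d2"), DServer("d3")]
curator = Curator(d_servers)

# 1. Ask the curator for a lease; it creates the file and returns locations.
lease, targets = curator.create_file("/videos/cat.mp4")
# 2. Write directly to the D servers, bypassing the curator.
for server in targets:
    server.append("/videos/cat.mp4", b"frame-data")
# 3. Release the lease once the write is done.
curator.release("/videos/cat.mp4", lease)
print(curator.locate("/videos/cat.mp4"))
```

The curator only ever touches metadata in this sketch; the file bytes travel between the client and the D servers.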

Curators store file system metadata in Google Bigtable, a NoSQL database. Because of the distributed nature of the Control Plane, Colossus can scale to over 100x the size of the largest Google File System clusters, while delivering lower latency.

Custodians in Colossus are background storage managers, and they handle things like disk space rebalancing, switching data between hot and cold storage, RAID reconstruction, failover and more.

D servers are the equivalent of the Chunk Servers in Google File System, and they’re just network attached disks. They store all the data that’s being held in Colossus. As mentioned previously, data flows directly between the D servers and the clients, to minimize the involvement of the control plane. This makes it much easier to scale the amount of data stored in Colossus without having the control plane as a bottleneck.

Colossus Abstractions

Colossus abstracts away a lot of the work you have to do in managing data.

Hardware Diversity

Engineers want Colossus to provide the best performance at the lowest cost. Data in the distributed file system is stored on a mixture of flash and disk. Figuring out the optimal amount of flash vs disk space and how to distribute data across them can mean a difference of tens of millions of dollars. To manage placement intelligently, engineers looked at how data was accessed in the past.

Newly written data tends to be hotter, so it’s stored in flash memory. Old analytics data tends to be cold, so that’s stored in cheaper disk. Certain data will always be read at specific time intervals, so it’s automatically transferred over to memory so that latency will be low.
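As a rough illustration of these placement rules, here is a small Python sketch. The `FileStats` fields, the `choose_tier` function and every threshold in it (7 days, 100 reads, a 1 hour prefetch window) are invented for this example; the source does not describe the actual heuristics Colossus uses.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FileStats:
    """Hypothetical access statistics for one file."""
    age_days: float
    reads_last_week: int
    next_scheduled_read_hours: Optional[float] = None  # None = no known pattern


def choose_tier(stats: FileStats,
                hot_age_days: float = 7,
                hot_reads: int = 100,
                prefetch_window_hours: float = 1) -> str:
    # Data with a predictable read coming up soon is promoted ahead of time.
    upcoming = stats.next_scheduled_read_hours
    if upcoming is not None and upcoming <= prefetch_window_hours:
        return "memory"
    # Newly written or frequently read data is treated as hot.
    if stats.age_days <= hot_age_days or stats.reads_last_week >= hot_reads:
        return "flash"
    # Everything else is cold and goes to the cheaper tier.
    return "disk"


print(choose_tier(FileStats(age_days=1, reads_last_week=0)))
print(choose_tier(FileStats(age_days=400, reads_last_week=2)))
print(choose_tier(FileStats(age_days=400, reads_last_week=2,
                            next_scheduled_read_hours=0.5)))
```

A real system would derive these decisions from observed access history rather than fixed thresholds, but the shape of the rule is the same.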

Clients don’t have to think about any of this. Colossus manages it for them.

Requirements

As mentioned, apps have different requirements around consistency, latency, availability, etc.

Colossus provides different service tiers so that applications can choose what they want.

Fault Tolerance

At Google’s scale, machines are failing all the time (it’s inevitable when you have millions of machines).

Colossus steps in to handle things like replicating data across D servers, background recovery and steering reads/writes around failed nodes.
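Two of those behaviors, steering reads around a failed node and restoring the replica count in the background, can be sketched in Python as follows. The `DServer` class, the `read_block` and `re_replicate` helpers and the target of 3 copies are assumptions made for this example, not details from the talk.

```python
class DServer:
    """Hypothetical D server with a simple up/down flag."""

    def __init__(self, name):
        self.name = name
        self.alive = True
        self.blocks = {}


def read_block(block_id, replicas):
    """Return the block from the first healthy replica, skipping dead ones."""
    for server in replicas:
        if server.alive and block_id in server.blocks:
            return server.blocks[block_id]
    raise OSError(f"no healthy replica for {block_id}")


def re_replicate(block_id, all_servers, target_copies=3):
    """Background recovery: copy the block to spare servers until the
    number of healthy copies is back at target_copies."""
    healthy = [s for s in all_servers if s.alive and block_id in s.blocks]
    if not healthy:
        raise OSError(f"{block_id} is lost")
    spares = [s for s in all_servers if s.alive and block_id not in s.blocks]
    missing = max(0, target_copies - len(healthy))
    for spare in spares[:missing]:
        spare.blocks[block_id] = healthy[0].blocks[block_id]
    return [s.name for s in all_servers if s.alive and block_id in s.blocks]


cluster = [DServer(f"d{i}") for i in range(1, 5)]
for server in cluster[:3]:
    server.blocks["b1"] = b"payload"

cluster[0].alive = False                # d1 fails
data = read_block("b1", cluster[:3])    # the read is served by d2 instead
holders = re_replicate("b1", cluster)   # d4 receives a fresh copy
print(data, holders)
```

The client never has to know that d1 failed: the read succeeds from another replica, and the copy count is repaired behind the scenes.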

For more details, you can watch the full talk here.
