How Figma Scaled their Databases by 100x

From 2020 to 2024, Figma's database stack has grown by 100x. We'll talk about how they did this. Plus, lessons learned from two decades of SRE at Google, how to avoid wasting your career and more.

Hey Everyone!

Today we’ll be talking about:

How Figma Scaled their Database Stack

Scaling with Caching, Read Replicas and Vertical Partitioning

Vertical Partitioning vs. Horizontal Partitioning

Considering NewSQL providers for sharding

Selecting the right shard key and sharding logically vs. physically

Tips on Negotiating Compensation for Software Engineers

Steve Huynh is a Principal Engineer at Amazon and he made a great video with tips on negotiating compensation

Rather than just asking for more money, you can start the conversation by discussing expectations and outcomes for the role

Understand what your alternatives are and what the company’s alternatives are. Many recruiters may try to create a false “sense of scarcity” around the offer.

Over-communicate with your managers. Send a weekly state of me email to your manager and skip-manager discussing your accomplishments and priorities.

Tech Snippets

How to waste your career, one comfortable year at a time

Lessons learned from two decades of Site Reliability Engineering at Google

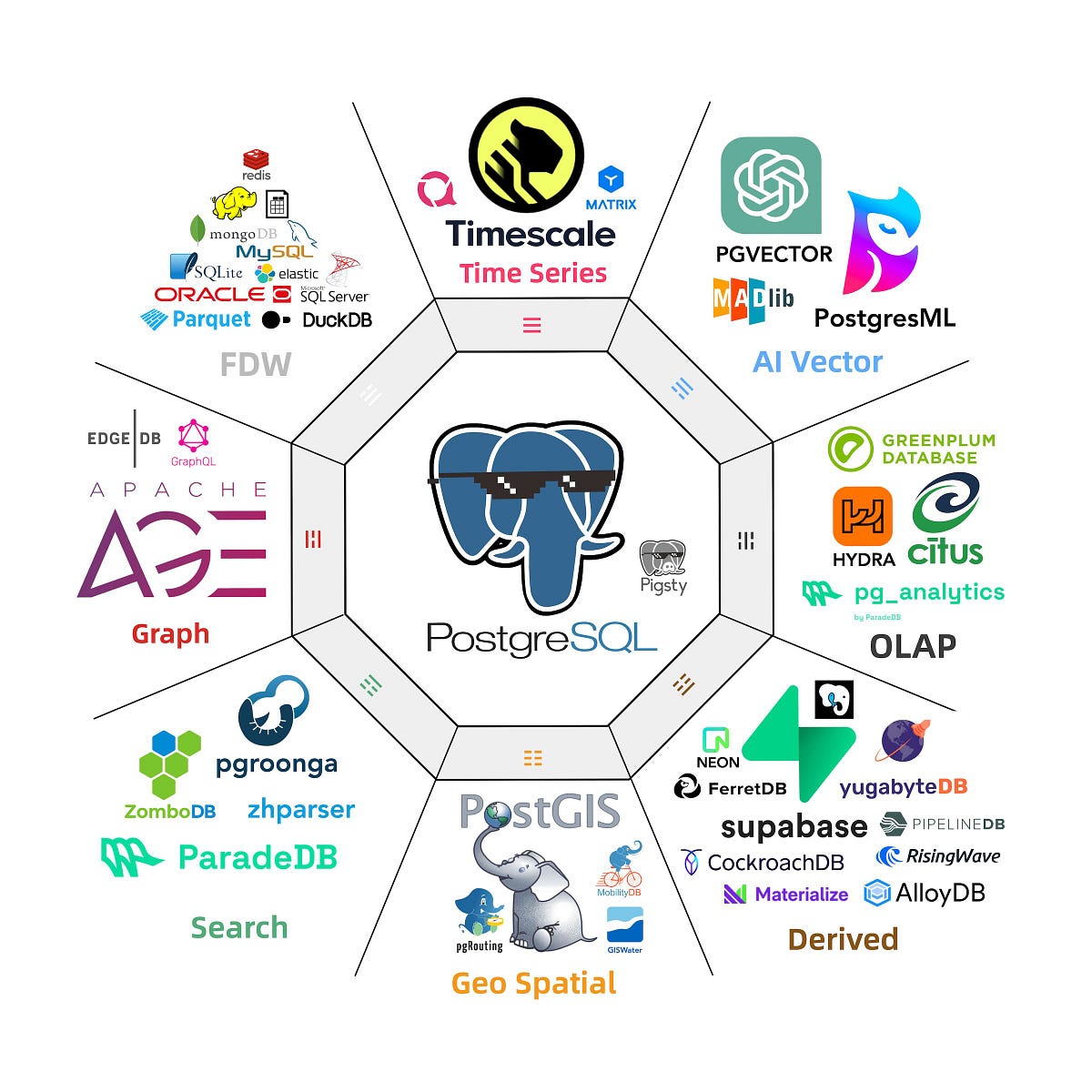

How Postgres is Eating the Database World

Vision Pro is an Over-Engineered “DevKit”

How Figma Scaled their Database Stack

Figma is a web application for designers that lets you create complex UIs and mockups. The app has a focus on real-time collaboration, so multiple designers can edit the same UI mockup simultaneously, through their web browser.

Over the past few years, Figma has experienced an insane amount of growth and has scaled to millions of users (attracting significant interest from incumbent players like Adobe).

The good news is that this means revenue is rapidly increasing, so the employees can all get rich when Adobe comes to buy the startup for $20 billion.

The bad news is that Figma’s engineers have to figure out how to handle all the new users. (The other bad news is that some European regulators might come in and cancel the acquisition deal… but let’s just stick to the engineering problems.)

Since Figma’s hyper-growth started, one core component they’ve had to scale massively is their data storage layer (they use Postgres).

In fact, Figma’s database stack has grown almost 100x since 2020. As you can imagine, scaling at this rate requires a ton of ingenuity and engineering effort.

Sammy Steele is a Senior Staff Software Engineer at Figma and she wrote a really fantastic blog post on how her team was able to accomplish this.

We’ll be summarizing the article with some added context.

In today’s newsletter, we’ll discuss a ton of concepts on vertical/horizontal scaling, NewSQL databases, selecting shard keys and more.

If you’d like to remember these concepts, check out Quastor Pro. You’ll get spaced-repetition flashcards on all the core topics we discuss in Quastor.

First Steps for Scaling

In 2020, Figma was running a single Postgres database hosted on the largest instance size offered by AWS RDS. As the company continued their “hypergrowth” trajectory, they quickly ran into scaling pains.

Their first steps for scaling Postgres were:

Caching - With caching, you add a separate layer (Redis and Memcached are popular options) that stores frequently accessed data. When you need to query data, you first check the cache for the value. If it’s not in the cache, then you check the Postgres database.
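The read path just described is the cache-aside pattern. Here's a minimal Python sketch of it, assuming plain dictionaries as stand-ins for Redis/Memcached and Postgres; the class and method names are hypothetical, not Figma's code.

```python
class CacheAsideStore:
    """Cache-aside reads over a slow store (dicts stand in for Redis and Postgres)."""

    def __init__(self, database):
        self.database = database  # stand-in for Postgres: key -> value
        self.cache = {}           # stand-in for Redis/Memcached
        self.db_reads = 0         # how many reads fell through to the database

    def get(self, key):
        # 1. Check the cache first.
        if key in self.cache:
            return self.cache[key]
        # 2. On a miss, read from the database...
        self.db_reads += 1
        value = self.database.get(key)
        # 3. ...and populate the cache so the next read is a hit.
        if value is not None:
            self.cache[key] = value
        return value

    def update(self, key, value):
        # Write to the database, then drop the cached copy so the next
        # read reloads the value instead of serving a possibly stale one.
        self.database[key] = value
        self.cache.pop(key, None)


store = CacheAsideStore({"file:1": "Landing page mockup"})
print(store.get("file:1"))  # miss: loaded from the database
print(store.get("file:1"))  # hit: served from the cache
print(store.db_reads)       # 1
```

Invalidating on write (rather than updating the cache in place) is one common choice: the next read goes back to the database instead of trusting a copy that may be out of date.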

There are different patterns for implementing caching, like Cache-Aside, Read-Through, Write-Through, Write-Behind and more. Each comes with its own tradeoffs.

Read Replicas - With read replicas, you take your Postgres database (the primary) and create multiple copies of it (the replicas). Read requests get routed to the replicas, whereas write requests are routed to the primary. You can use something like Postgres Streaming Replication to keep the read replicas synchronized with the primary.

One thing you’ll have to deal with is replication lag between the primary and replica databases: a replica may briefly serve data that doesn’t include the latest writes yet. If certain read requests require up-to-date data, then you might configure those reads to be served by the primary.

Separating Tables - Your relational database will consist of multiple tables. In order to scale, you might separate the tables and store them on multiple database machines.

For example, your database might have the tables: user, product and order. If your database can’t handle all the load, then you might take the order table and put that on a separate database machine.

Figma first split up their tables into groups of related tables. Then, they moved each group onto its own database machine.
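To make read replicas and table separation concrete, here's a hypothetical query router in Python. It assumes each group of related tables lives on its own database with one primary and some replicas; the table groups and host names are made up for illustration.

```python
import itertools


class Router:
    """Routes a query to a host based on its table and whether it writes."""

    def __init__(self, table_groups, clusters):
        # table_groups: table name -> group name (related tables share a database)
        # clusters: group name -> {"primary": host, "replicas": [host, ...]}
        self.table_groups = table_groups
        self.clusters = clusters
        # Round-robin over each group's replicas to spread the read load.
        self._replica_cycles = {
            group: itertools.cycle(cluster["replicas"])
            for group, cluster in clusters.items()
        }

    def route(self, table, is_write, needs_fresh_data=False):
        group = self.table_groups[table]
        cluster = self.clusters[group]
        # Writes always go to the primary. Reads that can't tolerate
        # replication lag (or groups without replicas) use the primary too.
        if is_write or needs_fresh_data or not cluster["replicas"]:
            return cluster["primary"]
        return next(self._replica_cycles[group])


router = Router(
    table_groups={"user": "core", "product": "core", "order": "orders"},
    clusters={
        "core": {"primary": "core-primary", "replicas": ["core-r1", "core-r2"]},
        "orders": {"primary": "orders-primary", "replicas": ["orders-r1"]},
    },
)
print(router.route("order", is_write=True))     # orders-primary
print(router.route("user", is_write=False))     # core-r1
print(router.route("product", is_write=False))  # core-r2 (same group as user)
print(router.route("user", is_write=False, needs_fresh_data=True))  # core-primary
```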

After Figma exhausted these three options, they were still facing scaling pains. They had tables that were growing far too large for a single database machine.

These tables had billions of rows and were starting to cause issues with running Postgres vacuums (we talked about Postgres vacuums and the TXID wraparound issue in depth in the Postgres Tech Dive).

The only option was to split these up into smaller tables and then store those on separate database machines.

Database Partitioning

When you’re partitioning a database table, there are two main options: vertical partitioning and horizontal partitioning.

Vertical partitioning is where you split up the table by columns. If you have a Users table with columns for country, last_login and date_of_birth, then you can split up that table into two different tables: one that contains last_login and another that contains country and date_of_birth. Each table keeps the user’s ID, so the pieces of a row can still be joined back together. Then, you can store these two tables on different machines.
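Here's a small Python sketch of that column split, assuming each row also carries a user_id key (the sample rows are made up). Lists of dicts stand in for the two tables that would live on different machines.

```python
users = [
    {"user_id": 1, "country": "Canada", "last_login": "2024-03-01",
     "date_of_birth": "1990-05-17"},
    {"user_id": 2, "country": "United States", "last_login": "2024-03-02",
     "date_of_birth": "1985-11-02"},
]


def split_columns(rows, columns):
    """Project each row onto the given columns, keyed by user_id."""
    return {row["user_id"]: {c: row[c] for c in columns} for row in rows}


# Partition 1 (could live on machine A).
login_table = split_columns(users, ["last_login"])
# Partition 2 (could live on machine B).
profile_table = split_columns(users, ["country", "date_of_birth"])


def get_user(user_id):
    # Rebuilding a full row now takes one lookup per partition,
    # joined on the shared user_id.
    return {"user_id": user_id, **login_table[user_id], **profile_table[user_id]}


print(get_user(2))
```

The tradeoff shows up in get_user: any query that needs columns from both halves has to touch both machines.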

Horizontal partitioning is where you divide a table by its rows. Going back to the Users table example, you might break the table up using the data in the country column. All rows with a country value of “United States” might go in one table, rows with a country value of “Canada” go in another table and so on.
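A minimal Python sketch of splitting rows by the country column (the sample rows are made up). Every partition keeps all the columns; only the set of rows differs.

```python
from collections import defaultdict

users = [
    {"user_id": 1, "country": "United States"},
    {"user_id": 2, "country": "Canada"},
    {"user_id": 3, "country": "United States"},
]


def partition_by_country(rows):
    """Group rows into one partition per distinct country value."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row["country"]].append(row)
    return dict(partitions)


partitions = partition_by_country(users)
# A query for users in one country only needs to read one partition.
print([row["user_id"] for row in partitions["United States"]])  # [1, 3]
print(sorted(partitions))  # ['Canada', 'United States']
```

Partition sizes follow the data, so a popular country produces a much larger partition than a small one.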

Figma implemented horizontal partitioning.

Challenges of Horizontal Partitioning

Splitting up your tables and storing them on separate machines adds a ton of complexity.

Some of the difficulties you’ll have to deal with include

Inefficient Queries - many of the queries you were previously running may now require visiting multiple database shards. Querying all the different shards adds a lot more latency due to all the extra network requests.

Code Rewrites - application code must be updated so that it routes each query to the correct shard.

Schema Changes - whenever you want to change a table schema, you’ll have to coordinate these changes across all your shards.

Implementing Transactions - transactions might now span multiple shards, so you can’t rely on Postgres’ native features for enforcing ACID transactions. If you want atomicity, then you’ll have to write code to make sure that either every shard commits the changes or every shard rolls them back.
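One well-known way to get all-or-nothing commits across shards is two-phase commit (2PC). The article doesn't say which approach Figma used; this is a toy, in-memory Python sketch of the general technique, with hypothetical names. A real implementation would need durable prepared state (Postgres offers PREPARE TRANSACTION and COMMIT PREPARED for this) and a plan for coordinator crashes.

```python
class Shard:
    """In-memory stand-in for one database shard."""

    def __init__(self, name, fail_prepare=False):
        self.name = name
        self.data = {}
        self.staged = None
        self.fail_prepare = fail_prepare  # simulate a shard that votes "no"

    def prepare(self, writes):
        # Phase 1: stage the writes and vote on whether we can commit them.
        if self.fail_prepare:
            return False
        self.staged = dict(writes)
        return True

    def commit(self):
        # Phase 2 (everyone voted yes): make the staged writes visible.
        self.data.update(self.staged)
        self.staged = None

    def rollback(self):
        # Phase 2 (someone voted no): throw the staged writes away.
        self.staged = None


def two_phase_commit(writes_by_shard):
    """Apply every shard's writes, or none of them."""
    prepared = []
    for shard, writes in writes_by_shard.items():
        if not shard.prepare(writes):
            for p in prepared:
                p.rollback()
            return False
        prepared.append(shard)
    for shard in prepared:
        shard.commit()
    return True


files, orgs = Shard("files"), Shard("orgs")
print(two_phase_commit({files: {"file:1": "v2"}, orgs: {"org:7": "v2"}}))  # True

broken = Shard("broken", fail_prepare=True)
print(two_phase_commit({files: {"file:1": "v3"}, broken: {"org:8": "v3"}}))  # False
print(files.data["file:1"])  # v2: the failed transaction changed nothing
```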

Figma tested for these issues by splitting their sharding process into logical and physical sharding (we’ll describe this in a bit).

Implementation of Horizontal Partitioning

One of the first considerations was whether Figma should implement horizontal partitioning themselves or look to an outside provider.

Build vs. Buy

NewSQL has been a big buzzword in the database world over the last decade. It refers to databases where the provider handles sharding for you. The pitch is that you get horizontal scalability while keeping ACID guarantees.

Examples of NewSQL databases include Vitess (managed sharding for MySQL), Citus (managed sharding for Postgres), Google Spanner, CockroachDB, TiDB and more.

Before implementing their own sharding solution, the Figma team first looked at some of these services. However, the team decided they’d rather manage the sharding themselves and avoid the hassle of switching to a new database provider.

Switching to a managed service would require a complex data migration and carry a ton of risk. Over the years, Figma had developed a ton of expertise on performantly running AWS RDS Postgres. They’d have to rebuild that knowledge from scratch if they switched to an entirely new provider.

Additionally, they needed a new solution as soon as possible due to their rapid growth. Transitioning to a new provider would require months of evaluation, testing and rewrites. Figma didn’t have enough time for this.

Instead, they decided to implement partitioning on top of their AWS RDS Postgres infrastructure.

Their goal was to tailor the horizontal sharding implementation to Figma’s specific architecture, so they didn’t have to reimplement all the functionality that NewSQL database providers offer. They wanted to just build the minimal feature set so they could quickly scale their database system to handle the growth.

We’ll now delve into the process Figma went through for sharding and some of the important factors they dealt with.

Selecting the Shard Key

Remember that horizontal sharding is where you split up the rows in your table based on the value in a certain column. If you have a table of all your users, then you might use the value in the country column to decide how to split up the data. Another option is to take a column like user_id and hash it to determine the shard (all users whose ID hashes to a certain value go to the same shard).
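Here's a minimal Python sketch of hash-based routing, assuming a hypothetical count of four shards and SHA-256 used purely as a stable, well-distributed hash (not for security). The function name is illustrative.

```python
import hashlib

NUM_SHARDS = 4  # hypothetical shard count


def shard_for(key, num_shards=NUM_SHARDS):
    """Map a shard-key value (e.g. a user_id) to a shard number."""
    # Hash a canonical string form of the key with a stable hash function.
    # (Python's built-in hash() is randomized per process for str, so two
    # app servers could disagree about where a key lives.)
    digest = hashlib.sha256(str(key).encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and reduce it to a shard.
    return int.from_bytes(digest[:8], "big") % num_shards


for user_id in (101, 102, 103):
    print(user_id, "->", shard_for(user_id))
```

Taking the hash modulo the shard count is the simplest scheme, but changing the count later remaps most keys; assigning ranges of the hash space to shards is one way to make resharding cheaper.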

The column that determines how the data is distributed is called the shard key and it’s one of the most important decisions you need to make. If you do a poor job, one of the issues you might end up with is hot/cold shards, where certain database shards get far more reads/writes than other shards.

At Figma, they initially picked columns like UserID, FileID and OrgID as shard keys. However, many of these keys were auto-incrementing or Snowflake timestamp-prefixed IDs. Because such IDs grow over time, splitting rows by their raw values would have resulted in significant hotspots where a single shard contained the majority of their data.

To solve this, they hashed the shard keys and used the output for routing. As long as the hash function’s output is uniformly distributed, rows spread evenly across the shards, which avoids hot/cold shards.
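The small Python experiment below illustrates why hashing helps. It assumes auto-incrementing IDs, four shards and a hypothetical range-based scheme where each shard owns a block of one million IDs, and it treats the 10,000 newest IDs as the rows getting the most writes (an assumption for illustration).

```python
import hashlib
from collections import Counter

NUM_SHARDS = 4
RANGE_SIZE = 1_000_000  # hypothetical: each shard owns a block of one million IDs


def range_shard(id_):
    # Route on the raw ID: shard 0 owns IDs 0..999,999, shard 1 the next block, etc.
    return min(id_ // RANGE_SIZE, NUM_SHARDS - 1)


def hashed_shard(id_):
    # Route on a uniform hash of the ID instead of its raw value.
    digest = hashlib.sha256(str(id_).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_SHARDS


# The newest 10,000 rows have consecutive auto-incremented IDs.
recent_ids = range(3_500_000, 3_510_000)

range_counts = Counter(range_shard(i) for i in recent_ids)
hashed_counts = Counter(hashed_shard(i) for i in recent_ids)
print(range_counts)
print(hashed_counts)
```

With range routing, every one of the recent IDs lands on shard 3; with hashing, they spread roughly evenly across all four shards.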

Making Incremental Progress with Logical Sharding

One of Figma’s goals with the sharding process was incremental progress. They wanted to take small steps and have the option to quickly roll back if there were any errors.

They did this by splitting up the sharding task into logical sharding and physical sharding.

Logical sharding is where the rows in the table are still stored on the same database machine but they’re organized and accessed as if they were on separate machines. The Figma team was able to do this with Postgres views. They could then test their system and make sure everything was working.
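A sketch of what logical sharding with views might look like: the Python below generates one CREATE VIEW statement per logical shard, each selecting a slice of the hash space from the same physical table. The table name files, the shard key file_id, the size of the hash space and the SQL function shard_hash() are all hypothetical; this is not Figma’s actual schema.

```python
HASH_SPACE = 2**16  # hypothetical range of shard_hash(): [0, 65536)


def logical_shard_views(table, shard_key, num_shards):
    """Return CREATE VIEW statements, one per logical shard of `table`."""
    step = HASH_SPACE // num_shards
    statements = []
    for shard in range(num_shards):
        low = shard * step
        # The last shard absorbs any remainder of the hash space.
        high = HASH_SPACE if shard == num_shards - 1 else low + step
        statements.append(
            f"CREATE VIEW {table}_shard{shard} AS "
            f"SELECT * FROM {table} "
            f"WHERE shard_hash({shard_key}) >= {low} "
            f"AND shard_hash({shard_key}) < {high};"
        )
    return statements


for stmt in logical_shard_views("files", "file_id", 4):
    print(stmt)
```

Because every view reads from the same physical table, the application can start querying "shard N" while the data stays put, and rolling back just means going back to the original table.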

Physical sharding is where you do the actual data transfers. You split up the tables and move them onto separate machines. After extensive testing on production traffic, the Figma team proceeded to physically move the data. They copied the logical shards from the single database over to different machines and re-routed traffic to go to the various databases.
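Once the application routes by logical shard, physical sharding can be modeled as a mapping from logical shards to database hosts. This hypothetical Python sketch (made-up host names, and assuming the data copy and verification happen separately) shows how moving a shard, or rolling it back, only changes one map entry.

```python
class ShardMap:
    """Tracks which physical database host serves each logical shard."""

    def __init__(self, num_logical_shards, initial_host):
        # Before physical sharding, every logical shard lives on one host.
        self.hosts = {shard: initial_host for shard in range(num_logical_shards)}

    def host_for(self, logical_shard):
        return self.hosts[logical_shard]

    def move(self, logical_shard, new_host):
        # Call only after the shard's rows are copied to new_host.
        # Flipping the entry re-routes its traffic; the returned old host
        # lets the caller roll back by moving the shard back.
        old_host = self.hosts[logical_shard]
        self.hosts[logical_shard] = new_host
        return old_host


shard_map = ShardMap(4, "db-main")
shard_map.move(2, "db-2")
shard_map.move(3, "db-3")
print([shard_map.host_for(s) for s in range(4)])
# ['db-main', 'db-main', 'db-2', 'db-3']

old = shard_map.move(3, "db-main")  # roll back shard 3
```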

Results

Figma shipped their first horizontally sharded table in September 2023. They did it with only 10 seconds of partial availability on database primaries and no availability impact on the read replicas. After sharding, they saw no regressions in latency or availability.

They’re now working on tackling the rest of their high-write load databases and sharding those onto multiple machines.


Subscribe to Quastor Pro for long-form articles on concepts in system design and backend engineering.

Past article content includes

System Design Concepts

Tech Dives

When you subscribe, you’ll also get Spaced Repetition (Anki) Flashcards for reviewing all the main concepts discussed in prior Quastor articles

Tips on Negotiating Compensation

Steve Huynh is a Principal Engineer at Amazon and he runs an awesome YouTube channel called A Life Engineered.

Recently, he published a fantastic video delving into tips on negotiating compensation where he talked with Brian Liu. Brian runs a startup that negotiates compensation on behalf of software engineers.

Here’s a summary of the main takeaways from the video.

Focus on how you can create value for the company

Many people think of negotiating as just telling your recruiter “I have another offer that is paying me $xyz more, so plz match”.

Instead, Brian suggests you start by creating a document that outlines expectations and outcomes for the role. The goal is to do this when you get the offer and to do it with your potential manager.

This aligns expectations and shows your manager that you’re focused on meeting their needs. After doing this, it’s easier to ask for the extra compensation you’re looking for.

Brian talks about how he had a client who was originally given an L62 offer at Microsoft (mid-level engineer offer).

Rather than trying to negotiate with the recruiter, the engineer talked to his future manager and discussed expectations and deliverables for the role. He was able to make a case for L63 (senior software engineer) impact and offered to do additional interviews to prove he was capable.

The entire process took an additional month but he was able to get his level and compensation increased to L63, which accelerated his career by 1-2 years.

Understand BATNA

BATNA stands for Best Alternative to a Negotiated Agreement. It just means your alternative if the current offer doesn’t work out.

The best negotiators will obviously work to strengthen their own BATNA (get other high-paying offers) but they’ll also understand the target company’s BATNA. What other options does the company have if you reject the offer?

Many recruiters are trained to create as much “scarcity” as possible around the offer. They might tell you the offer won’t last, hint at imminent rounds of fundraising which could dilute your equity, etc.

Instead, you should try to have an abundance mindset. Understand exactly how much time you have to look for an offer (based on your financial situation) and don’t just settle for an offer where you’re down-leveled/underpaid out of fear.

Of course, if you’re in a precarious financial situation then perhaps you should just take the offer, but then it might make sense to stay on the market and continue looking if you’re severely underpaid/under-leveled.

Your work will not speak for itself

This is advice geared towards being on the job. You have to understand that solely doing good work is not enough. You need to have a strategy for how you communicate your accomplishments with your manager.

It’s impossible for your manager/skip-manager to have a total understanding of what you’re doing all day. They obviously have their own tasks and priorities.

Instead, Brian advocates sending your manager and skip-manager a weekly state of me email.

In it, you discuss:

What have you accomplished over the past week

Where are you currently blocked

What are your priorities for the next week

Doing this will help you stay on the radar of the people who decide your future promotions and compensation.

Also, you should keep a brag document so that your manager knows what you’ve achieved over the last quarter.

However, don’t just summarize your work in the brag document.

Instead, you should figure out:

What does your manager care about?

What does your skip manager care about?

Identify the accomplishments your manager cares about most and make sure they’re clearly communicated.

For more details, watch the full video here.