How Booking.com Processes Millions of Events Every Second

We'll talk about Events and the Three Pillars of Observability. Plus, how to crush your job onboarding, how to improve your team's documentation and more.

Hey Everyone!

Today, we’ll be talking about

How Booking.com does Observability

Brief Introduction to the Three Pillars of Observability (Metrics, Logs, Traces)

Why Booking Relies on Events (Key-Value Pairs) and How They Structure Them

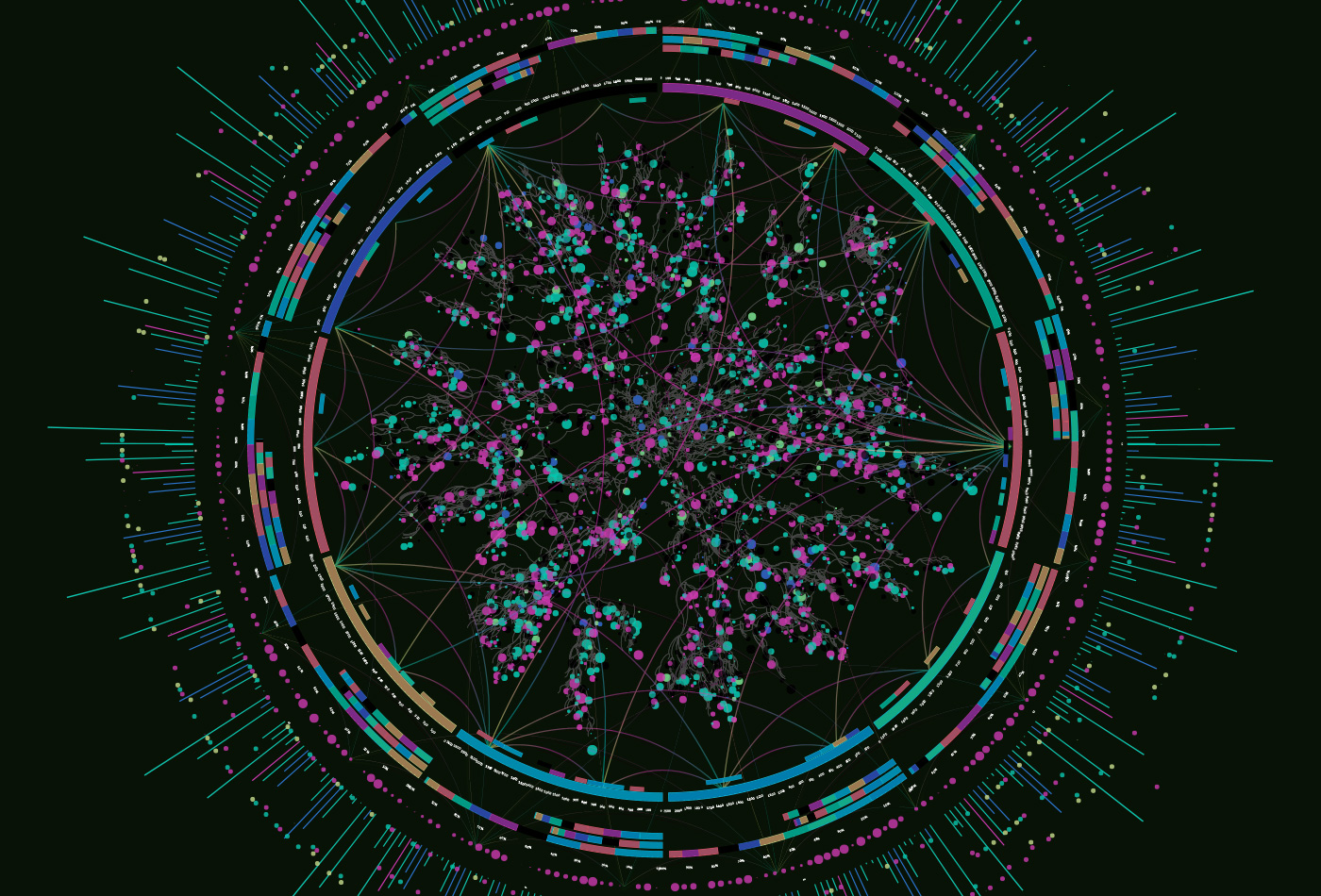

The Architecture of Booking’s Event System

Issues Booking has had with Events and Why They’re Switching to OTel

Tech Snippets

7 Tips to Crush Your Job Onboarding from an Apple Staff Engineer

Test Features, Not Code

What We Learned from a Year of Building with LLMs

Prioritize Documentation over Meetings

How Booking.com Processes Millions of Events Every Second

Booking.com is one of the largest online travel agencies in the world. Every month, hundreds of millions of users visit their platform to search for hotels, flights, resorts and more.

For their backend, the company uses a service-oriented architecture running on a hybrid-cloud setup (a mix of on-prem data centers and public cloud services).

At their size, observability is crucial for debugging any issues, scaling the system, recovering from outages, etc.

With observability, you’ll frequently see the “three pillars” mentioned: metrics, logs and traces (we’ll delve into each of these in case you’re unfamiliar).

However, Booking takes a different approach. They rely on events (key-value pairs) to capture detailed, contextual information from the entire lifecycle of any “operation” (this can be an HTTP request, cron job, background job, etc.).

Booking will then run analytics on these events. They’ll also use the events to generate metrics, logs and traces.

The engineering team has scaled this observability system to the point where they handle tens of millions of events every second. Earlier this month, the Booking team published a great blog post delving into how this works.

In today’s article, we’ll first go over the three pillars of observability. Then, we’ll talk about events and how Booking uses them.

Three Pillars of Observability

Logs, metrics and traces are commonly referred to as the “Three Pillars of Observability”. We’ll quickly break down each one.

Logs

Logs are timestamped records of discrete events/messages that are generated by the various applications, services and components in your system.

They’re meant to provide a qualitative view of the backend’s behavior and can typically be split into

Application logs - Messages logged by your server code, databases, etc.

System logs - Generated by system-level components like the operating system and hardware devices. They cover things like disk errors, kernel messages, etc.

Network logs - Generated by routers, load balancers, firewalls, etc. They provide information about network activity like packet drops, connection status, traffic flow and more.

Logs are commonly in plaintext, but they can also be in a structured format like JSON. They’re typically stored in a searchable datastore like Elasticsearch.

The issue with just using logs is that they can be extremely noisy, and it’s hard to extract a higher-level picture of the state of your system from them.
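To make the plaintext vs. structured distinction concrete, here's a minimal sketch using Python's standard logging module. The logger name, message and JSON field names are made up for illustration; they aren't from any particular system.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object (field names are illustrative)."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(payload)


# Build a record by hand so the example does not depend on handler setup.
record = logging.LogRecord(
    name="checkout",
    level=logging.ERROR,
    pathname="example.py",
    lineno=1,
    msg="payment failed for order %s",
    args=(42,),
    exc_info=None,
)

# The same record rendered as plaintext and as structured JSON.
plaintext_line = logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s").format(record)
structured_line = JsonFormatter().format(record)

print(plaintext_line)
print(structured_line)
```

The structured version is easier to index and query by field, which is a big part of why it pairs well with a search-oriented store.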

Incorporating metrics into your toolkit helps you solve this.

Metrics

Metrics provide a quantitative view of your backend around factors like response times, error rates, throughput, resource utilization and more. They’re commonly stored in a time series database.

They’re amenable to statistical modeling and prediction. Calculating averages, percentiles, correlation and more with metrics makes them particularly useful for understanding the behavior of your system over some period of time.
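As a rough illustration of why percentiles matter, here's a small sketch using Python's statistics module. The latency numbers are invented for the example.

```python
import statistics

# Hypothetical response times in milliseconds; one slow outlier.
latencies_ms = [12, 15, 11, 14, 13, 250, 16, 12, 15, 13]

mean_ms = statistics.mean(latencies_ms)
median_ms = statistics.median(latencies_ms)

# quantiles(n=100) returns the 99 cut points between the 1st and 99th percentile,
# so index 94 is the 95th percentile.
cut_points = statistics.quantiles(latencies_ms, n=100, method="inclusive")
p95_ms = cut_points[94]

print(f"mean={mean_ms:.1f}ms median={median_ms:.1f}ms p95={p95_ms:.1f}ms")
```

The single slow request pulls the mean well above the median, while the p95 shows how bad the tail is. That's the kind of signal that's hard to get from scrolling through raw logs.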

The shortcoming of both metrics and logs is that they’re scoped to an individual component/system. If you want to figure out what happened across your entire system during the lifetime of a request that traversed multiple services/components, you’ll have to do some additional work (joining together logs/metrics from multiple components).

This is where distributed tracing comes in.

Tracing

Distributed traces allow you to track and understand how a single request flows through multiple components/services in your system.

To implement this, you identify specific points in your backend where you have some fork in execution flow or a hop across network/process boundaries. This can be a call to another microservice, a database query, a cache lookup, etc.

Then, you assign each request that comes into your system a unique ID (a UUID, for example) so you can keep track of it. You add instrumentation to each of these specific points in your backend so you can track when the request enters/leaves. The ID is passed along with the request as it crosses service boundaries (the OpenTelemetry project calls this Context Propagation).

This entire process creates a trace.

With distributed tracing, you have spans and traces. A span represents a single operation (a database query or a call to another service, for example). A trace represents the complete workflow of a transaction across multiple services and consists of multiple spans.
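Here's a minimal sketch of the span/trace relationship in plain Python. It's a toy model, not the OpenTelemetry API; the Span class, the helper functions and the operation names are all hypothetical.

```python
import time
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    """One operation within a trace (toy model, not a real tracing library)."""
    trace_id: str
    name: str
    parent_id: Optional[str] = None
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:16])
    start: float = field(default_factory=time.monotonic)
    end: Optional[float] = None

    def finish(self) -> None:
        self.end = time.monotonic()


def start_trace(name: str) -> Span:
    # The root span creates the trace ID that every child will share.
    return Span(trace_id=uuid.uuid4().hex, name=name)


def start_child(parent: Span, name: str) -> Span:
    # Carrying the parent's trace_id and span_id forward is the propagation step.
    return Span(trace_id=parent.trace_id, name=name, parent_id=parent.span_id)


root = start_trace("GET /search")
db_span = start_child(root, "db.query")
db_span.finish()
cache_span = start_child(root, "cache.lookup")
cache_span.finish()
root.finish()

# The trace is the set of spans that share the same trace_id.
trace = [root, db_span, cache_span]
for span in trace:
    print(span.name, span.span_id, "parent:", span.parent_id)
```

In a real system the trace ID and parent span ID travel in request headers or message metadata, so a service on another machine can attach its spans to the same trace.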

You can analyze this data with an open-source system like Jaeger or Zipkin.

If you’d like to learn more about implementing traces, you can read about how DoorDash used OpenTelemetry here.

How Booking uses Events

Rather than relying on metrics, logs and traces, Booking uses events as the foundation for their observability system.

An event is a key-value data structure that contains information about things like

Performance - duration of the request, latency, etc.

Errors - any warnings/errors generated

Services - which services were involved in the processing

And more.

Snippet of Sample Event

{
  "availability_zone": "london",
  "created_epoch": "1660548925.3674",
  "service_name": "service A",
  "git_commit_sha": "..",
  …
}

Events can be generated for any “unit of work” like an HTTP request, cron job, background job, etc.

The event data is later used to generate data around metrics, logs and traces. Booking will also run analytics queries on the event data.
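To give a feel for what "one event per unit of work" could look like in code, here's a hedged sketch. This isn't Booking's events library (which is proprietary); the unit_of_work helper and the operation, duration_ms, error and results_returned fields are assumptions made for illustration. Only service_name, availability_zone and created_epoch come from the sample above.

```python
import time
from contextlib import contextmanager


@contextmanager
def unit_of_work(service_name: str, operation: str, sink: list):
    """Collect context for one operation and emit a single event when it ends."""
    event = {
        "service_name": service_name,
        "operation": operation,          # hypothetical field
        "created_epoch": time.time(),
    }
    start = time.monotonic()
    try:
        # The caller can attach any extra key-value context while the work runs.
        yield event
    except Exception as exc:
        event["error"] = repr(exc)       # hypothetical field
        raise
    finally:
        event["duration_ms"] = (time.monotonic() - start) * 1000  # hypothetical field
        sink.append(event)               # stand-in for sending the event somewhere


emitted = []
with unit_of_work("service A", "GET /hotels", emitted) as event:
    event["availability_zone"] = "london"
    event["results_returned"] = 25

print(emitted[0])
```

The idea is that everything known about the operation ends up in one wide record, which can later be sliced into metrics, logs or spans.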

Benefits of Event Data

Some of the benefits Booking sees from events include

Richer context - Events have full context about the runtime environment(s), services involved, performance statistics and more. This is all aggregated into a single data structure so it’s easier to manage.

Improved debugging - Using events has helped the Booking team answer many questions around the “unknown-unknowns” that come up frequently in distributed systems. They can use events to see which users are being affected, all the services involved, any feature flags, A/B tests and more.

Cross-component Analysis - Events can be configured to give a more holistic view than metrics/logs. They can track state across multiple components of the Booking backend.

Implementing Events at Booking

Booking’s implementation of events is entirely proprietary (but they want to change this).

They have a custom events library that they use to send events to an event-proxy daemon. This daemon runs on all the machines in their fleet.

The daemon handles tasks like enriching events with metadata, routing events to different Kafka topics and more.
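The enrich-then-route step can be sketched roughly like this. Booking hasn't published the daemon's internals, so the metadata fields, the "kind" field, the topic names and the routing rule below are all hypothetical, and the publish callback stands in for a real Kafka producer.

```python
from typing import Callable, Dict

# Hypothetical mapping from a hypothetical "kind" field to hypothetical topic names.
TOPIC_BY_KIND: Dict[str, str] = {
    "http_request": "events.http",
    "cron_job": "events.cron",
}
DEFAULT_TOPIC = "events.other"


def enrich(event: dict, host_metadata: dict) -> dict:
    """Add machine-level metadata without overwriting what the application set."""
    enriched = dict(host_metadata)
    enriched.update(event)
    return enriched


def choose_topic(event: dict) -> str:
    return TOPIC_BY_KIND.get(event.get("kind", ""), DEFAULT_TOPIC)


def proxy(event: dict, host_metadata: dict, publish: Callable[[str, dict], None]) -> None:
    enriched = enrich(event, host_metadata)
    publish(choose_topic(enriched), enriched)


# Usage with an in-memory stand-in for the message broker.
published = []
host = {"hostname": "host-1", "availability_zone": "london"}
proxy({"service_name": "service A", "kind": "cron_job"}, host,
      lambda topic, event: published.append((topic, event)))
proxy({"service_name": "service B"}, host,
      lambda topic, event: published.append((topic, event)))

for topic, event in published:
    print(topic, event)
```

Running the daemon on every machine means application code only needs to hand off the event locally; anything the machine already knows can be attached in one place.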

After the event-proxy daemon routes the event to Kafka, several different consumers consume the data.

Consumers include

Distributed tracing consumer - converts the event data into spans and traces. Booking works with Honeycomb for managing distributed tracing, so they upload their spans there.

APM generator - generates application performance monitoring (APM) metrics around things like the number of requests, latencies, etc. Booking stores this data in Graphite.

Failed event processor - this consumer looks for events with error messages and writes them to Elasticsearch. Developers at the company can then use this for debugging.

And more.
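Here's a simplified, in-memory sketch of how the same stream of events could feed the three consumers listed above. It leaves out Kafka, Honeycomb, Graphite and Elasticsearch entirely, and the field names (trace_id, operation, duration_ms, error) are assumptions rather than Booking's actual schema.

```python
from collections import defaultdict

# Hypothetical events, as they might look after being read from a topic.
events = [
    {"trace_id": "t1", "service_name": "service A", "operation": "GET /hotels", "duration_ms": 120.0},
    {"trace_id": "t2", "service_name": "service A", "operation": "GET /hotels", "duration_ms": 80.0,
     "error": "timeout"},
    {"trace_id": "t3", "service_name": "service B", "operation": "cron.cleanup", "duration_ms": 40.0},
]


def to_span(event: dict) -> dict:
    """Distributed tracing consumer: reshape an event into a span-like record."""
    return {
        "trace_id": event["trace_id"],
        "name": event["operation"],
        "service": event["service_name"],
        "duration_ms": event["duration_ms"],
    }


def apm_metrics(batch: list) -> dict:
    """APM generator: request count and average latency per service."""
    durations = defaultdict(list)
    for event in batch:
        durations[event["service_name"]].append(event["duration_ms"])
    return {
        service: {"requests": len(values), "avg_duration_ms": sum(values) / len(values)}
        for service, values in durations.items()
    }


def failed_events(batch: list) -> list:
    """Failed event processor: keep only events that carry an error."""
    return [event for event in batch if event.get("error")]


spans = [to_span(e) for e in events]
metrics = apm_metrics(events)
failures = failed_events(events)

print(spans)
print(metrics)
print(failures)
```

Each consumer is a different projection of the same data, so adding a new view means adding a consumer rather than re-instrumenting every service.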

Challenges with Events at Booking

The main challenges that Booking faces with events are due to the fact that their implementation is proprietary to the company.

Booking needs to maintain the event libraries for code instrumentation. If they want to integrate the data with third parties, then they need to write custom conversion code.

To reduce the maintenance burden, Booking is looking to migrate their system from custom events to OpenTelemetry (OTel).

Some of the benefits from OTel include

Language support - OpenTelemetry provides libraries in a wide range of programming languages. This means Booking doesn’t have to maintain their event library code anymore.

Extensibility - OTel is extensible and supports custom instrumentation libraries and additional data types. This helps them integrate their existing internal tools seamlessly.

Wide vendor support - OTel is supported by vendors like Datadog, Honeycomb, GCP, AWS, New Relic and many more. This reduces vendor lock-in.