The Architecture of DoorDash's Caching System

DoorDash wrote a great blog post delving into how they implemented caching for their microservices. Plus, why clever code is the worst code you can write and more.

Hey Everyone!

Today we'll be talking about

The Architecture of DoorDash’s Caching System

DoorDash teams were each building their own caching solutions. The teams were running into the same problems around getting rid of stale data, adding observability and more.

DoorDash built a shared caching library that all the backend teams across the company could use.

We’ll talk about the architecture of this library and some of the features they built into it.

Tips on Mentoring other Software Engineers

One key factor in transitioning from a mid-level dev to a senior dev is your ability to mentor junior developers.

Jordan Cutler wrote a fantastic blog post delving into mentorship and how you can become a better mentor

Help your mentee build a career growth plan

When advising your mentee, don’t jump straight to answers; learn how to convey advice effectively

Check in frequently and compliment often

Tech Snippets

Why “Clever Code” is the worst code you can write

Everything You Need to Know About Micro Frontends

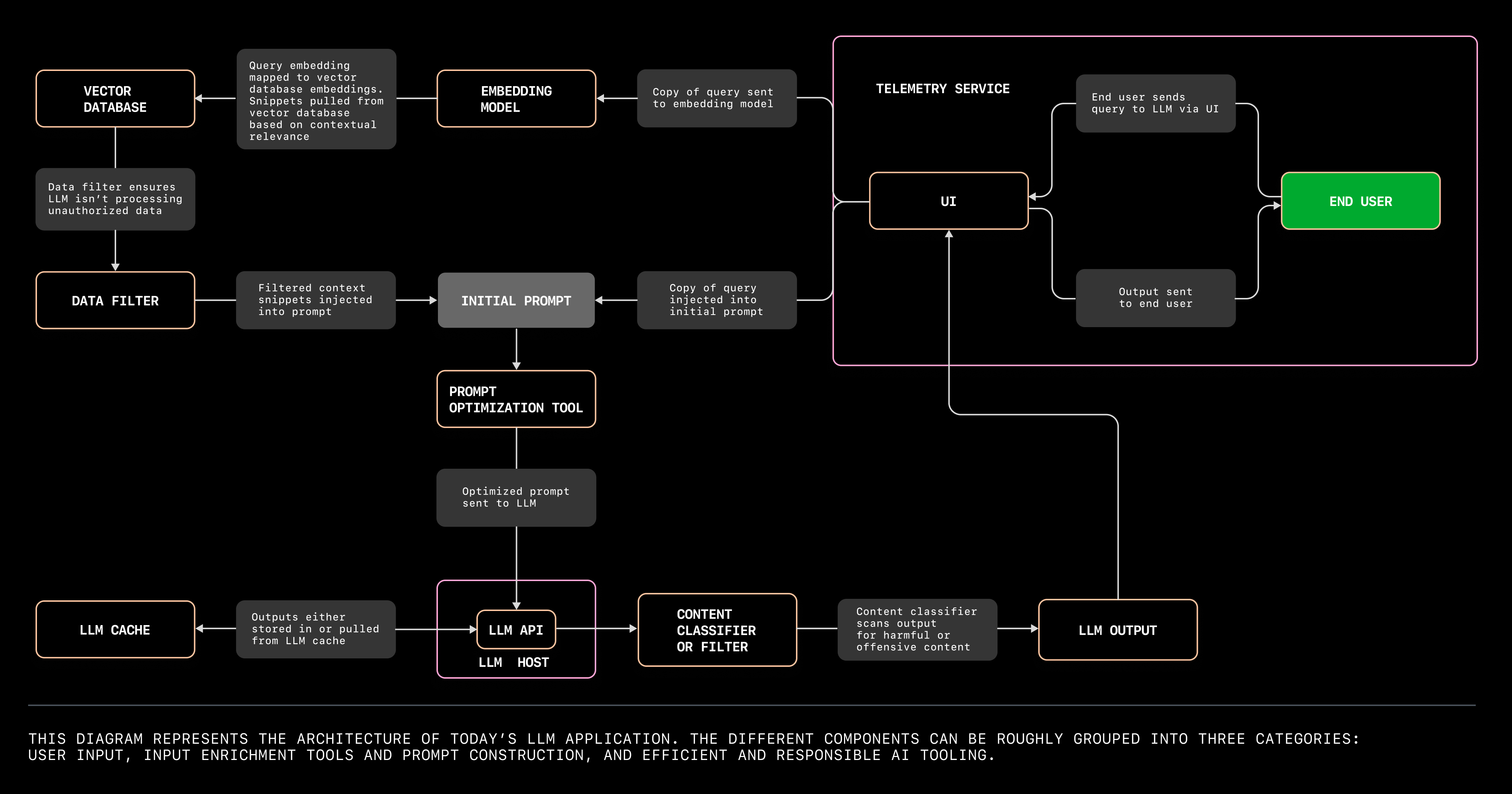

Tips on Building with LLMs from GitHub

The Architecture of Serverless Data Systems

Comparing Humans, GPT-4, and GPT-4 Vision On Abstraction and Reasoning Tasks

The Architecture of DoorDash's Caching System

DoorDash is the largest food delivery app in the US with over 30 million active users and hundreds of thousands of restaurants/stores. In other words, they’re the reason you gained 15 lbs during COVID.

For their backend, DoorDash relies on a microservices architecture where services are written in Kotlin and communicate with gRPC. If you’re curious, you can read about their decision to switch from a Python monolith to microservices here.

As you might’ve guessed, having a bunch of network requests for service-to-service communication isn’t great for minimizing latency. Reducing the number of network requests by optimizing I/O patterns became a priority for the DoorDash team.

The most straightforward way of doing this (without overhauling a bunch of existing code) is to utilize caching. Slap on a caching layer so that you don’t make additional network requests for data you just received.

DoorDash uses Kotlin (and the associated JVM ecosystem), so they were using Caffeine (an in-memory Java caching library) for local caching and Lettuce (a Redis client for Java) for distributed caching.

The issue they ran into was that teams were each building their own caching solutions. These various teams would then run into the same problems and waste engineering time building their own solutions.

Some of the problems the teams at DoorDash were facing were…

Cache Staleness - Each team had to wrestle with the problem of invalidating cached values and making sure that the cached data didn’t become too outdated.

Lack of Runtime Controls - If there are issues with the caching layer, or its parameters (TTL, eviction strategy, etc.) need tuning, the fix might require a new deployment or rollback. This consumes time and development resources.

Inadequate Metrics and Observability - How is the caching layer performing? Is the cache hit rate too low? Is the cached data that’s being returned completely out of date? You need something that provides metrics and observability so you can assess this.

Inconsistent Key Schema - The lack of a standardized approach for cache keys across teams made debugging harder.

Teams at DoorDash were each individually figuring out these issues for their custom-built caching layers. This was a big waste of engineering time (we might not be in the 2021 job market anymore but developer time is still expensive).

Therefore, DoorDash decided to create a shared caching library that all teams could use.

They decided to start with a pilot program where they picked a single service (DashPass) and would build a caching solution for that. They’d battle-test & iterate on it and then give it to the other teams to use.

Here are some features of the new caching library.

Layered Caching

A common strategy when building a caching system is to build it in layers. For example, you’re probably familiar with how your CPU does this with L1, L2, L3 cache. Each layer has trade-offs in terms of speed/space available.

DoorDash used this same approach where a request would progress through different layers of cache until it’s either a hit (the cached value is found) or a miss (value wasn’t in any of the cache layers). If it’s a miss, then the request goes to the source of truth (which could be another backend service, relational database, third party API, etc.).

The team implemented three layers in their caching system

Request Local Cache - this just lives for the lifetime of the request and uses Kotlin’s HashMap data structure.

Local Cache - this is visible to all workers within a single Java virtual machine. It’s built using Caffeine, a caching library for Java.

Redis Cache - this is visible to all pods that share the same Redis cluster. It’s implemented with Lettuce, a Redis client for Java.

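To make the lookup flow concrete, here's a minimal Python sketch of a layered read path. This is an illustration, not DoorDash's code (their library is written in Kotlin). The `LayeredCache` class, the plain dicts standing in for the request-local, Caffeine and Redis layers, and the choice to copy a hit back into the faster layers are all assumptions made for the example.

```python
from typing import Any, Callable, Dict, Hashable, List

_MISSING = object()  # sentinel so that a cached None still counts as a hit


class LayeredCache:
    """Hypothetical layered cache: checks each layer in order, then the source of truth."""

    def __init__(self, layers: List[Dict[Hashable, Any]]):
        # Layers are ordered fastest to slowest,
        # e.g. request-local, process-local, shared.
        self.layers = layers

    def get(self, key: Hashable, load_from_source: Callable[[], Any]) -> Any:
        for depth, layer in enumerate(self.layers):
            value = layer.get(key, _MISSING)
            if value is not _MISSING:
                # Hit: copy the value into the faster layers that missed
                # (an assumption of this sketch, not something the post describes).
                for faster in self.layers[:depth]:
                    faster[key] = value
                return value
        # Miss in every layer: ask the source of truth, then populate all layers.
        value = load_from_source()
        for layer in self.layers:
            layer[key] = value
        return value
```

You'd create it with something like `LayeredCache([request_local, process_local, shared])` and call `cache.get("user:1", fetch_user)`, where `fetch_user` is whatever function talks to the source of truth. The loader only runs when every layer misses.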

Runtime Feature Flags

Different teams will need various customizations around the caching layer. To make this as seamless as possible, DoorDash added runtime controls so teams can change the cache's configuration on the fly instead of shipping a new deployment.

Tuning options include

Kill Switch - If something goes wrong with the caching layer, then you should be able to shut it off ASAP.

Change TTL - To remove stale data from the cache, DoorDash uses a TTL. When the TTL expires, the cache will evict that key/value pair. This TTL is configurable since data that doesn’t change often can tolerate a higher TTL value.

Shadow Mode - Shadowing certain requests is a good way to ensure your caching layer is working properly. A certain percentage of requests to the cache will have their cached value compared with the Source-of-Truth value. If there are lots of differences for shadow mode requests, then you might want to double check what’s going on.
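Here's a rough Python sketch of how these three controls could fit together in a single cache. It's an illustration only. `CacheSettings`, `TunableCache`, the field names and the default values are all hypothetical, and the injectable `clock` and `rand` arguments are there just to make the behavior easy to test. The post doesn't describe how DoorDash's Kotlin library implements this.

```python
import random
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple


@dataclass
class CacheSettings:
    """Hypothetical runtime-tunable settings; mutate them to change behavior live."""
    enabled: bool = True        # kill switch
    ttl_seconds: float = 60.0   # how long an entry stays valid
    shadow_rate: float = 0.0    # fraction of hits re-checked against the source


class TunableCache:
    def __init__(self, settings: CacheSettings,
                 clock: Callable[[], float] = time.monotonic,
                 rand: Callable[[], float] = random.random):
        self.settings = settings
        self.clock = clock
        self.rand = rand
        self.entries: Dict[Any, Tuple[Any, float]] = {}  # key -> (value, stored_at)
        self.shadow_matches = 0
        self.shadow_mismatches = 0

    def get(self, key: Any, load_from_source: Callable[[], Any]) -> Any:
        if not self.settings.enabled:
            # Kill switch: bypass the cache and go straight to the source.
            return load_from_source()
        entry = self.entries.get(key)
        if entry is not None:
            value, stored_at = entry
            # The TTL is read on every lookup, so a changed value applies at once.
            if self.clock() - stored_at < self.settings.ttl_seconds:
                if self.rand() < self.settings.shadow_rate:
                    # Shadow mode: also query the source and compare the values.
                    if load_from_source() == value:
                        self.shadow_matches += 1
                    else:
                        self.shadow_mismatches += 1
                return value
            del self.entries[key]  # expired entry
        value = load_from_source()
        self.entries[key] = (value, self.clock())
        return value
```

Because the cache reads `settings` on every call, flipping `settings.enabled = False` or lowering `settings.ttl_seconds` takes effect on the next request. In a real system those values would come from a feature-flag or dynamic-config service rather than an in-process object.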

Observability and Cache Shadowing

With a company as large as DoorDash, it’s obviously important to have some idea of how well the system is working.

They use a couple of different metrics in their caching layer to measure caching performance.

Cache Hit/Miss Ratio - The primary metric for analyzing performance is looking at how many cache requests are successfully answered without having to query the Source of Truth. Any cache misses (where the source of truth gets hit) will be logged.

Cache Freshness/Staleness - As mentioned above, DoorDash built in a Shadow Mode for requests. Requests with shadow mode turned on will also query the source-of-truth and compare the cached and actual values to check if they’re the same. Metrics with successful & unsuccessful matches are graphed and a high amount of cache staleness will send an alert to wake up a DoorDash engineer somewhere.
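As a small worked example, both metrics boil down to simple ratios over counters. The Python sketch below is hypothetical. The `CacheMetrics` name, its fields and the 5% alert threshold are assumptions for illustration, not values from DoorDash's post.

```python
from dataclasses import dataclass


@dataclass
class CacheMetrics:
    """Hypothetical counters a caching layer could export."""
    hits: int = 0
    misses: int = 0
    shadow_matches: int = 0
    shadow_mismatches: int = 0

    def hit_ratio(self) -> float:
        # Share of lookups answered without touching the source of truth.
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

    def staleness_rate(self) -> float:
        # Share of shadowed requests where the cached value differed from the source.
        checked = self.shadow_matches + self.shadow_mismatches
        return self.shadow_mismatches / checked if checked else 0.0

    def should_alert(self, max_staleness: float = 0.05) -> bool:
        # The threshold is an arbitrary example value.
        return self.staleness_rate() > max_staleness
```

With 90 hits and 10 misses the hit ratio is 90%. With 18 shadow matches and 2 mismatches the staleness rate is 10%, which is above the example threshold, so `should_alert()` returns `True`.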

For more details, read the full blog post here.

How To Remember Concepts from Quastor Articles

We go through a ton of engineering concepts in the Quastor summaries and tech dives.

In order to help you remember these concepts for your day to day job (or job interviews), I’m creating flash cards that summarize these core concepts from all the Quastor summaries and tech dives.

The flash cards are completely editable and are meant to be integrated in a Spaced-Repetition Program (Anki) so that you can easily remember the concepts forever.

This is an additional perk for Quastor Pro members in addition to the weekly tech dive articles.

Quastor Pro is only $12 a month and you should be able to expense it with your job’s learning & development budget. Here’s an email you can send to your manager. Thanks for the support!

How to be the Mentor You Wish You Had

Jordan Cutler is a Senior Software Engineer at Qualified and writes a fantastic newsletter on career advice for developers looking to level up faster.

If you want to go from a mid-level to a senior-level developer, then one of the key criteria is your ability to mentor junior developers on your team. Good mentorship can make the difference between a junior developer sticking around for 3 years at the company versus leaving after a one-year stint.

In his post, Jordan delved into actionable things you can do to help your mentee with their goals and be that mentor that you wish you had.

P.S. - Jordan is also teaching a cohort-based course on growing from a mid-level to senior developer. He’s been kind enough to provide Quastor readers with a $50 off coupon.

Build a Career Growth Plan

Give your mentee clear and specific advice on how they can grow at the company.

You should help them

Review past feedback on how they could improve and give them actionable steps on what they need to change

Prioritize 3-5 skills they should focus on. Maybe they need to focus on their communication skills and learn how to effectively convey their contributions to the rest of the team. Or perhaps they’re struggling technically so you give them pointers on how to improve their understanding of the codebase.

Set SMART (Specific, Measurable, Achievable, Relevant, Time-bound) goals for each focus area. Once you set these goals, bring them up during weekly check-ins to see how they’re progressing and if they’re facing any blockers.

Don’t Jump to Answers

When your mentee is expressing issues they’re facing at work, a bad way to respond is to jump in with solutions.

Instead, you should spend more time prodding, asking questions and making sure you understand exactly what difficulty they’re facing. By focusing on this, you’ll also help your mentee feel like their feelings are being validated.

After you have a solid grasp of the context, you can delve into advice.

When giving advice, some great ways to do so are

Using Personal Stories - This can help them piece together the lesson on their own rather than just being told what to do. It also helps make your advice more relatable since it shows that this is something you’ve dealt with in the past.

Highlighting Tradeoffs - Another alternative is to restate the decision/issue the mentee is facing and talk through their potential solutions. For each course of action, talk through the trade-offs and the pros/cons so they can arrive at their own conclusion.

Be Careful of Coming off as Judgemental

If you’re not careful with your language, it can be very easy to come off as judgemental.

You should avoid

Using “just” - Using “just” can come off as condescending. “Can you just implement XYZ” can make the task seem overly trivial.

Interrogative Questioning - If you ask questions in an interrogative manner (why didn’t you try XYZ?) this can put people on the defensive and potentially lead to an argument.

Being Overly Prescriptive - Try to find a balance between letting your mentee make their own decisions and “figure things out themselves” versus you advising them on what to do.

These are a couple of tips Jordan gives in his newsletter. For the full post, check it out here.